The Path Forward for Embedded AI

In April 2021, I published an article in Printed Circuit Design & Fab in which I proclaimed that the future of AI is embedded. In short, my view is that embedded systems will run more AI on the end device, meaning they will rely less on cloud platforms or data centers for inference.

Without a doubt, I believe the future of AI is still embedded, but not with the chipset or system architecture I had in mind. When I wrote the previous article, I was still stuck on the idea that combinational + sequential logic could conquer all computing problems. Experience has shown that it simply cannot.

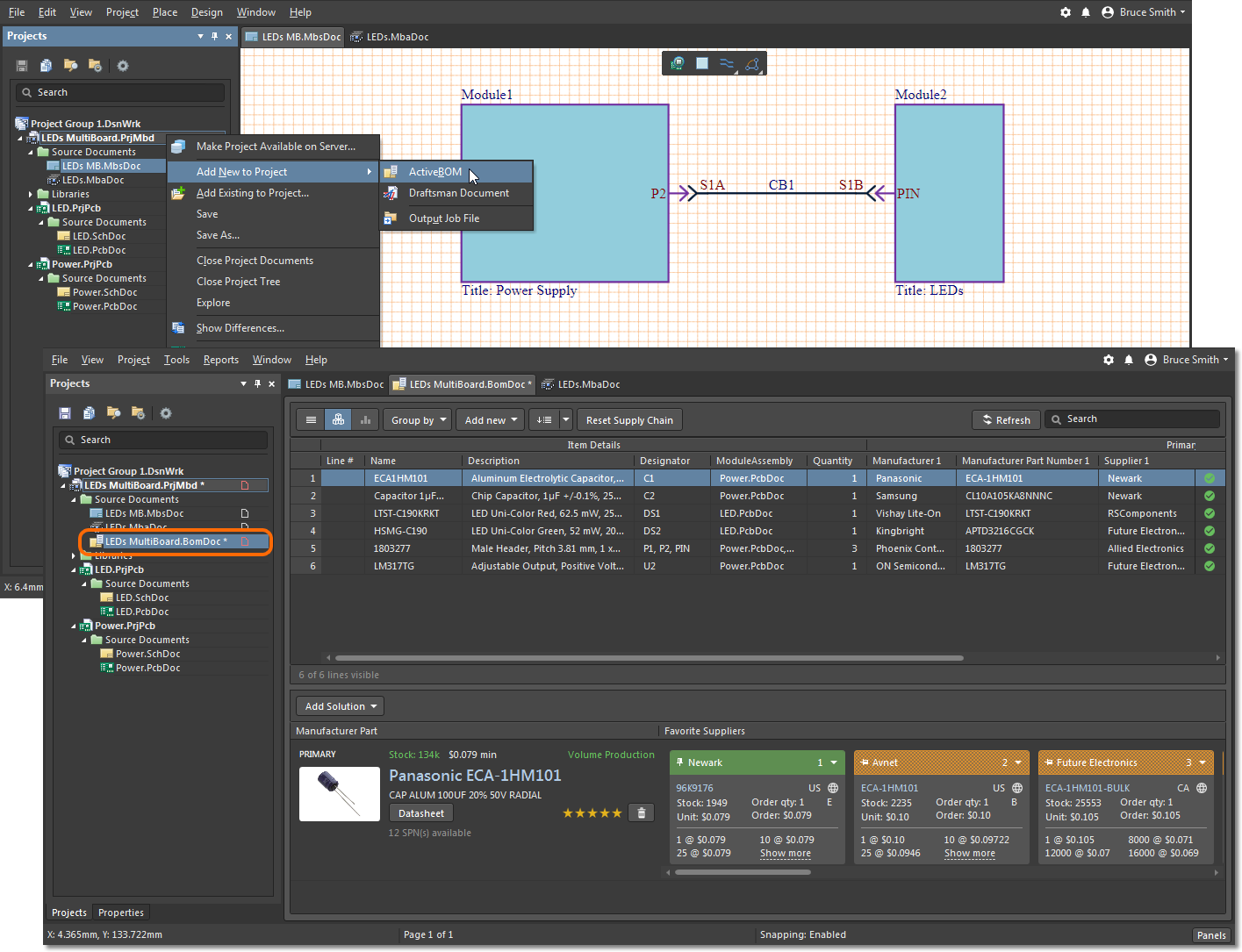

This view has been confirmed over the past 18 months, particularly given the release of many new AI accelerator chips in 2022. For PCB designers and engineers, these chips are a great option for quickly adding AI capabilities to a design over standard interfaces. Typically, they are accessed over a PCIe Gen2 or faster lane, possibly over USB, or even over something as slow as SPI for low-compute accelerators. The chips also come in standard packages (quad or BGA) that you would place and route in the usual way. To see how far on-device AI has come and where it is going next, I decided to present some of the most interesting AI accelerator releases of 2022.
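To put the interface options above in perspective, here is a rough back-of-the-envelope sketch of how long it takes to move one input frame to an external accelerator. The 50 MHz SPI clock and the 224 x 224 RGB frame are illustrative assumptions, not figures for any particular accelerator, and the results are ideal line-rate numbers that ignore protocol overhead.

```python
def transfer_time_ms(num_bytes, line_rate_bps, encoding_efficiency=1.0):
    """Ideal time in milliseconds to move num_bytes over a serial link."""
    payload_rate_bps = line_rate_bps * encoding_efficiency
    return num_bytes * 8 / payload_rate_bps * 1e3


# Hypothetical payload: one 224 x 224 RGB frame, 1 byte per channel.
FRAME_BYTES = 224 * 224 * 3

# (line rate in bit/s, encoding efficiency). These are upper bounds only:
# protocol overhead, bit stuffing, and driver latency are ignored.
LINKS = {
    "SPI at 50 MHz (assumed clock)": (50e6, 1.0),
    "USB 2.0 High Speed": (480e6, 1.0),
    "PCIe Gen2 x1": (5e9, 0.8),  # 5 GT/s with 8b/10b encoding
}

for name, (rate, efficiency) in LINKS.items():
    time_ms = transfer_time_ms(FRAME_BYTES, rate, efficiency)
    print(f"{name}: {time_ms:.3f} ms per frame")
```

Under these assumptions, the SPI link needs roughly 24 ms per frame, compared with about 0.3 ms for a single PCIe Gen2 lane, which is why SPI only makes sense for low-compute accelerators with small inputs.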

AI Processor Releases in 2022

Now that the world has entered the age of AI, the hardware industry has a lot of ground to make up in order to rival the software industry. Some of the newer advanced processors targeting embedded AI applications are listed in the table below.

[Table: newer advanced processors targeting embedded AI applications, released in 2022; table contents not available]

These startups and research institutions are driving embedded AI forward with considerable advances in their custom chips. Conversely, we have not seen much further development from established embedded AI products like Google Coral, which was one of the easier-to-use accelerator modules. Aside from these specialized processors, there are two other paths designers can take to bring embedded AI capabilities into their designs.

Three Paths to Embedded AI

Looking at the trends from these startups, established semiconductor manufacturers, and the open source community, I see three pathways to implementing embedded AI in a new system:

Software accelerators on MCUs – The lowest-compute path to accelerating AI inference, tagging/pre-processing, and training is in firmware/software. TinyML packages allow developers to quickly implement software-based acceleration techniques that speed up inference. These methods manipulate the data or the model in order to reduce the number of processing steps required in inference. With these techniques, developers can now run simpler inference models on small MCUs, all with simple sequential + combinational logic.

Custom silicon – Custom SoCs are not going away anytime soon, as all the hyperscale tech companies are building out their own internal chip development capabilities. With ready-made cores from ARM and an open standard like RISC-V, chip designers can quickly spin up a core design that implements AI compute operations at the logic level as hardware instructions. This massively reduces power consumption and the number of raw compute operations.

FPGAs – There has been a continuous push to move FPGAs away from their historical role as chip prototyping tools and into the mainstream as production-grade processors. Semiconductor vendors now support RISC-V implementation and AI acceleration, so designers can build highly customized FPGA cores with AI inference and training tasks implemented at the instruction level. All of my recent client projects for advanced applications (AI-driven imaging systems and sensor fusion) have been built around an FPGA as the main processor.
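As a minimal sketch of the kind of model manipulation used in the software path, the example below applies two common techniques: magnitude pruning, which removes multiply-accumulate steps, and symmetric int8 quantization, which replaces floating-point math with integer math. Neither technique is named in this article, and the weights, inputs, threshold, and function names are all hypothetical values chosen for illustration rather than the API of any specific TinyML package.

```python
def prune_small_weights(weights, threshold):
    """Magnitude pruning: zero out weights whose magnitude is below threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def sparse_dot(weights, inputs):
    """Dot product that skips zero weights; returns (result, MAC count)."""
    total, macs = 0.0, 0
    for w, x in zip(weights, inputs):
        if w != 0.0:
            total += w * x
            macs += 1
    return total, macs


def quantize_int8(values):
    """Symmetric quantization: one shared scale maps floats into [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [max(-127, min(127, round(v / scale))) for v in values], scale


def int8_dot(q_w, w_scale, q_x, x_scale):
    """Integer multiply-accumulate followed by a single float rescale."""
    acc = sum(w * x for w, x in zip(q_w, q_x))  # integer-only inner loop
    return acc * w_scale * x_scale


# Hypothetical weights and inputs for a single neuron.
weights = [0.42, -1.30, 0.07, 0.88, -0.55, 0.03, 1.27, -0.91]
inputs = [0.5, 0.25, -1.0, 0.75, 0.1, -0.3, 0.9, -0.6]

reference, dense_macs = sparse_dot(weights, inputs)

pruned = prune_small_weights(weights, threshold=0.1)
pruned_result, pruned_macs = sparse_dot(pruned, inputs)

q_w, w_scale = quantize_int8(pruned)
q_x, x_scale = quantize_int8(inputs)
approx = int8_dot(q_w, w_scale, q_x, x_scale)

print(f"dense MACs: {dense_macs}, pruned MACs: {pruned_macs}")
print(f"float result: {reference:.4f}, pruned + int8 result: {approx:.4f}")
```

The trade-off is a small loss in numerical accuracy in exchange for fewer and cheaper operations, which is what makes simple models practical on small MCUs.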

Which Way Will the Industry Go?

The products listed above are all built on custom silicon. The FPGA industry does not need custom silicon specifically for AI; its customers can use development tools to implement AI at the logic level without worrying about designing logic circuits to mimic the structure of a neural network. Semiconductor vendors are seeing the value of the FPGA approach, and they are providing the development tools designers need (including RISC-V resources) to implement an AI core in an FPGA.

In terms of component selection and placement, the FPGA approach means some systems could scale to physically smaller chips, a smaller BOM, and the removal of a high-risk part of the assembly, namely the AI accelerator chip. Eliminating the AI accelerator is a great idea at all levels. For manufacturing, overall component and assembly costs will likely be reduced. For the PCB designer, there is one less chip (typically with a high I/O count in a BGA) and its peripherals to place and route. For the developer, it eliminates the need to develop a driver to control the external accelerator and then integrate it into the embedded application.

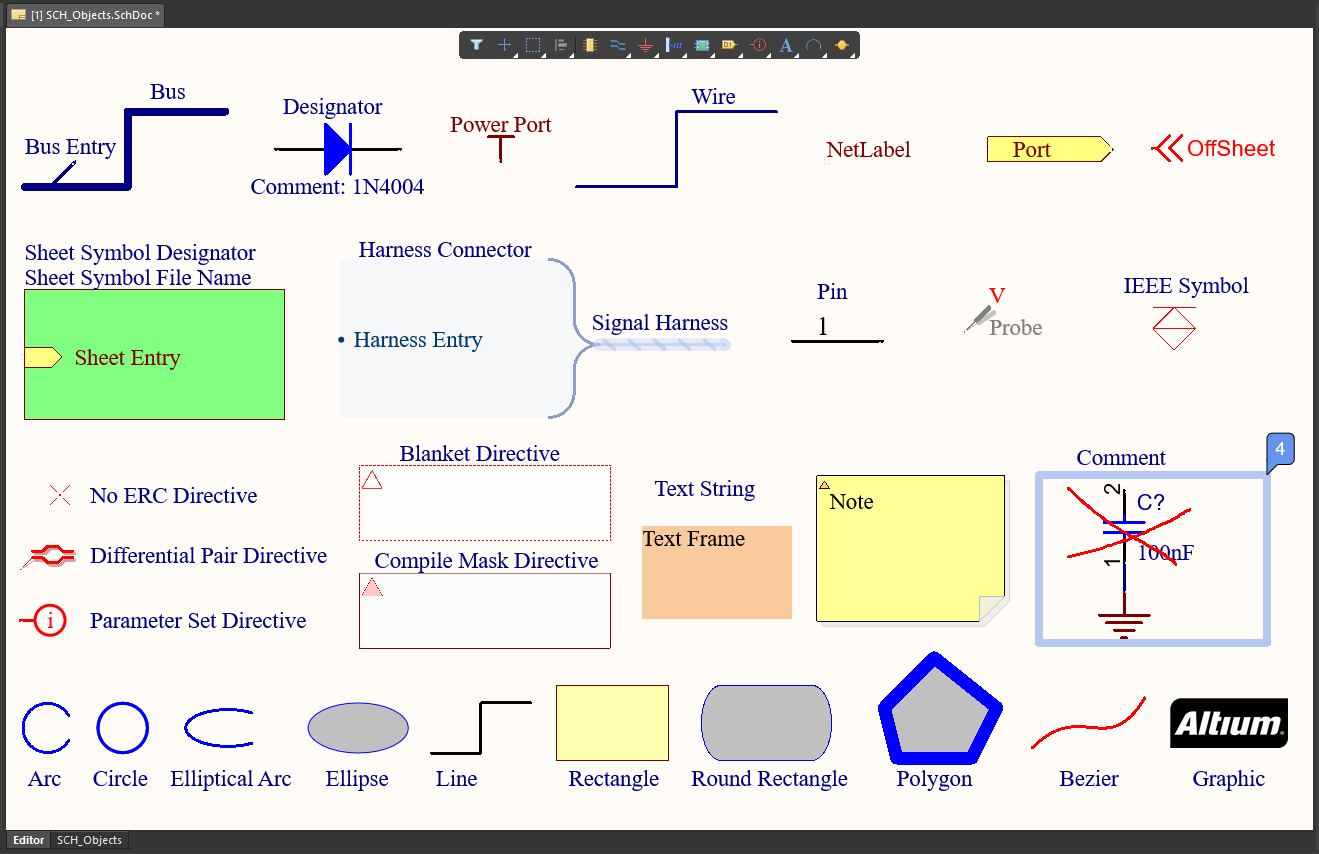

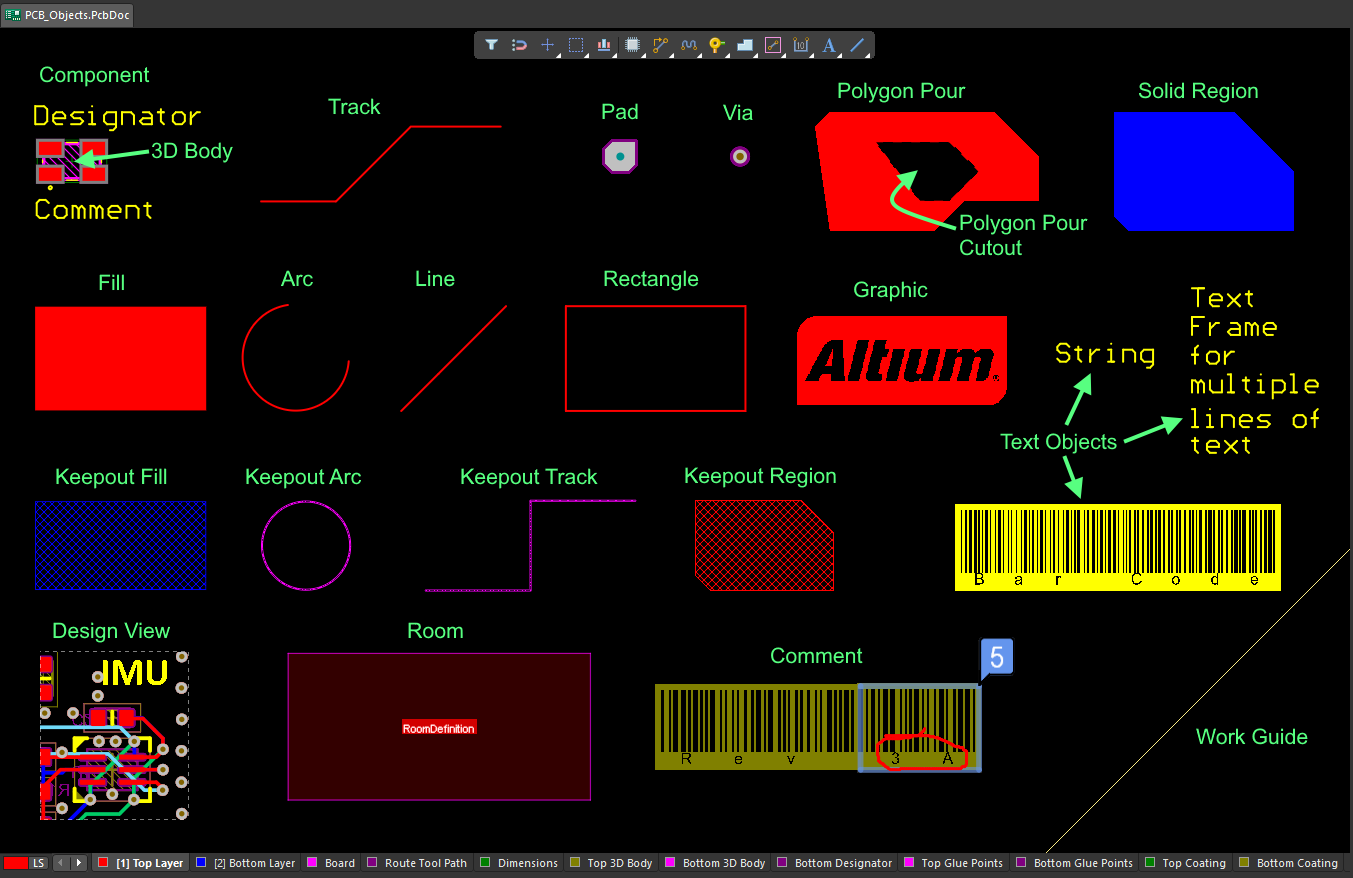

Embedded AI developers can implement these hardware-driven AI accelerator options in PCBs with the complete set of product design tools in Altium Designer®. The CAD features in Altium Designer enable all aspects of systems and product design, ranging from packaging and PCB layout up to harness and cable design. When you’ve finished your design and you want to release files to your manufacturer, the Altium 365™ platform makes it easy to collaborate and share your projects.

We have only scratched the surface of what’s possible with Altium Designer on Altium 365. Start your free trial of Altium Designer + Altium 365 today.