Are We Poised on the Brink of a New Age?

As a species, we like to define things, categorize them, and stick metaphorical labels on them. In addition to helping us organize our thoughts and ideas, this allows us to construct a mental framework for how we understand the world and how we communicate with each other.

The problem is that we prefer to think in terms of crisp, sharp, black-and-white concepts. In the real world, however, things are fuzzy and gray. Take the notion of an "Age" as it pertains to human development, for example. Until relatively recently (i.e., around 260 years ago), we might class human history as falling into the Stone Age, Bronze Age, or Iron Age. Many people are keen on assigning specific dates to the start and end of these periods, but this really isn’t possible for several reasons. For one thing, the ages overlapped in any given geographical location; for another, they occurred at different times in different regions, such as Europe, the Near East, Africa, Asia, and the Americas.

Just to increase the fun and frivolity, different people came up with their own definitions. Take Hesiod, a Greek poet who was writing sometime between 750 BC and 650 BC, for example. Hesiod omitted the Stone Age, but added the Golden Age, Silver Age, and Heroic Age. Furthermore, he used the terms "Golden" and "Silver" to refer to a state of being as opposed to the working of these metals. Hesiod's Golden Age, for example, referred to the time when gods and people lived together in peace and harmony and nobody had to work.

Things started to firm up a little with the Industrial Age, which is defined by the Wikipedia as: "A period of history that encompasses the changes in economic and social organization that began around 1760 in Great Britain and later in other countries, characterized chiefly by the replacement of hand tools with power-driven machines such as the power loom and the steam engine, and by the concentration of industry in large establishments."

Still later we entered the current Information Age (also known as the Computer Age, Digital Age, or New Media Age), which the Wikipedia defines as: "A historic period beginning in the 20th century and characterized by the rapid shift from traditional industry that the Industrial Revolution brought through industrialization to an economy primarily based upon information technology."

But this is where things start to get fuzzy again. I think it's fair to say that countries like the UK (where I was born) and the USA (where I currently live) are bestriding the Industrial and Information Ages, while many developing countries are still firmly rooted in Industrial Age (i.e., manufacturing) economies.

And what of the future? Will advanced technologies like 5G communications, artificial intelligence (AI), and augmented reality (AR) herald a new age? (See also What the FAQ are AI, ANNs, ML, DL, and DNNs? and What the FAQ are VR, MR, AR, DR, AV, and HR?) Personally, I think this all falls under the Information Age umbrella.

Having said this, I do think that we are poised on the brink of entering a new period we might call the Materials Age. Let's start with the fact that 118 elements have been identified thus far. Of these, some occur naturally on Earth, while others are said to be synthetic because the only time we see them is when we've created them by manipulating fundamental particles in a nuclear reactor, in a particle accelerator, or in the explosion of an atomic bomb.

How many naturally occurring elements are there? Well, this is another area where things start to get a little "fluffy around the edges." The usual textbook answer is 91, the Wikipedia says 94, and I just ran across this article that explains how recent discoveries have raised the number to 98.

It's amazing to me that, by combining this very limited number of basic building blocks in different ways, we end up with everything we see in the universe, including life itself. What's more amazing is that we really haven’t even scratched the surface of what is possible.

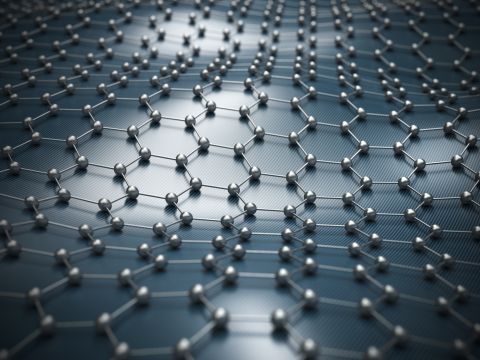

Take carbon, for example. The term allotrope refers to structurally different forms of the same element. Most people are aware that diamond is an allotrope of carbon, some may add graphite, but relatively few are aware of buckminsterfullerene (a.k.a. buckyballs), graphene, graphenylene, carbon nanotubes, carbon nanobuds, carbon nanofoam, lonsdaleite, glassy carbon, linear acetylenic carbon (carbyne), and... the list goes on.

And then there's the relatively recent discovery of something called "superatoms," which are clusters of between 8 and 100 atoms of one element that have the amazing ability to mimic single atoms of different elements. As Sam Kean says in his book The Disappearing Spoon:

> For instance, thirteen aluminium atoms grouped together in the right way do a killer bromine: the two entities are indistinguishable in chemical reactions. This happens despite the cluster being thirteen times larger than a single bromine atom and despite aluminium being nothing like the lacrimatory poison-gas staple. Other combinations of aluminium can mimic noble gases, semiconductors, bone material like calcium, or elements from pretty much any other region of the periodic table.

I have to say that, when I first read this, I was blown away. If you can use aluminum atoms to create clusters that mimic the characteristics of elements spanning the periodic table, and if you can do the same thing with other elements, then the possibilities defy imagination.

I don't think a week goes by these days without news of some new material being discovered. Take superconductors, for example. When superconductivity was first discovered by the Dutch physicist Heike Kamerlingh Onnes in 1911, it was assumed that the material had to be cooled close to absolute zero; that is, 0 K or −273.15°C (see also What the FAQ are Kelvin, Rankine et al?). Year by year, however, new materials have been discovered that exhibit superconductivity at higher and higher temperatures.

The "Holy Grail" of this field is to find one or more room-temperature superconductors. These are materials capable of exhibiting superconductivity at temperatures around 77°F (25°C, or roughly 298 K), and finding one would revolutionize electronics and power systems as we know them. Well, according to this article on Phys.org, a scientist working for the US Navy has filed for a patent on a room-temperature superconductor. Even if this one doesn’t pan out, I have every faith that we will discover such a material in my lifetime.

When I was younger, a lot of science fiction stories featured newspapers with moving color photographs, but I doubted this would ever be possible; now, I'm not so sure. Electronic ink is a type of electronic paper display technology that is characterized by a wide viewing angle, extremely low power requirements, and the fact that it looks good even in full daylight, unlike regular backlit displays that wash out in bright light. Unfortunately, early electronic paper displays (like the one used in the original Amazon Kindle) were only black-and-white. More recently, E Ink and Wacom have unveiled a new type of color e-paper that could appear on the market as soon as 2021. Furthermore, just a few days ago as I pen these words, I saw an article about a new material that will enable nano-thin, ultra-flexible touchscreens that can be printed like a newspaper.

As recently as 10 February 2020, research published in the journal Nature Electronics described how a new material based on tellurium, a rare metalloid element, and shaped like a one-dimensional DNA helix might dramatically reduce the size of transistors. Encapsulated in a nanotube made of boron nitride, this material can be used to build a field-effect transistor with a diameter of only two nanometers.

Returning to the beginning of this column where we talked about the Stone Age, Bronze Age, and Iron Age, another way to look at this is that we have historically regarded materials as such an important aspect of our civilizations that entire periods have been defined by the predominant material used during those times.

For most of recorded history, we've used whatever materials we happened to find lying around (I know this is a gross simplification, but you know what I mean). More recently, we have begun to develop new materials, and there's a tendency to preen ourselves and tell ourselves how clever we are, but we need to beware of hubris. "There is little left in the heavens to discover," stated Canadian-American astronomer Simon Newcomb in 1888. "The most important fundamental laws and facts of physical science have all been discovered," proclaimed American physicist Albert Michelson in 1894. I wonder what they would say if they could see us now. Personally, I think we have only just begun to dip our toes in the "materials waters," as it were. What about you? What do you think?