Can We Engineer an Uncrashable Car?

The uncrashable car is not a distant dream. It's an engineering reality in progress. But while we're making rapid strides toward vehicles that can avoid accidents, there are fundamental challenges that still need to be solved.

For engineers working on this frontier, the integration of numerous sensor modalities, the complexities of sensor fusion, and the scalability of E/E architectures are key barriers that must be overcome. This article explores the specific difficulties and trade-offs involved in developing the perception systems that will bring the uncrashable car closer to reality.

Overview of the Current Situation: Crash Statistics and ADAS Effectiveness

Road safety remains a major issue, with road fatalities staying high both in the U.S. and worldwide. The European Union reported 20,640 road deaths in 2023, while U.S. traffic fatalities consistently hover around 42,000 per year. Distracted driving, speeding, and impaired driving contribute heavily to these figures.

Advanced Driver Assistance Systems (ADAS) have emerged as a key technology for mitigating these risks. ADAS assists drivers with features such as Automatic Emergency Braking (AEB) and Lane-Departure Warning (LDW), and it is estimated to be capable of reducing crashes by nearly 24%, a meaningful gain for road safety.

Current Capability of ADAS Systems

While ADAS technologies are improving vehicle safety, each system has its strengths and weaknesses. Below are some of the key capabilities, their effectiveness, and current limitations.

1. Automatic Emergency Braking (AEB)

- Effectiveness: AEB systems detect potential collisions using radar and cameras, applying the brakes if the driver does not respond in time. AEB systems designed to prevent or mitigate rear-end collisions have proven highly effective. Studies show that AEB can reduce rear-end crashes by 50%, significantly lowering injury rates.

- Limitations: AEB performance diminishes in challenging environments, such as bad weather or low light. Most systems perform well in ideal conditions but struggle to detect pedestrians at night or in inclement weather. Moreover, false positives (cases where the system brakes unnecessarily) can frustrate drivers and lead them to switch the system off.

2. Lane-Departure Warning (LDW)

- Effectiveness: LDW is designed to prevent unintended lane departures by warning drivers when they drift out of their lane. The system can help prevent lane-change crashes, with a 14% reduction reported in certain cases. It is particularly useful in preventing fatigue-related accidents.

- Limitations: LDW systems rely heavily on clear lane markings. They tend to underperform on poorly marked roads, in construction zones, or when markings are obscured by snow or rain. Driver response is also critical: if the driver is incapacitated or inattentive, LDW warnings may not prevent the crash.

3. Forward-Collision Warning (FCW)

- Effectiveness: FCW provides drivers with early warnings about potential frontal collisions, giving them extra time to react. When combined with AEB, FCW systems have been shown to further reduce the risk of frontal collisions, especially at high speeds. In some studies, FCW has reduced crash rates by as much as 27% in highway settings.

- Limitations: Like AEB, FCW systems can suffer from environmental limitations. Rain, fog, or snow can interfere with sensors, reducing the system's ability to detect potential hazards.

4. Blind-Spot Detection (BSD)

- Effectiveness: BSD systems reduce the risk of lane-change accidents by warning drivers when vehicles are in their blind spot. Blind-spot detection has shown a 14% reduction in lane-change crashes. The system provides drivers with real-time information that would otherwise be difficult to detect.

- Limitations: BSD systems may struggle to detect fast-approaching vehicles or smaller objects like motorcycles, especially in poor weather. Over-reliance on the system can also lead drivers to neglect manual checks, increasing the risk if the system malfunctions.

5. Driver Monitoring Systems (DMS) and Occupant Monitoring Systems (OMS)

- Effectiveness: DMS and OMS technologies detect signs of driver distraction, drowsiness, or impairment. They monitor eye movement, head position, and even heart rate, and they alert the driver or take action if the driver appears incapacitated. They have significant potential to reduce crashes caused by driver inattention or fatigue.

- Limitations: Current DMS technologies have difficulty detecting fatigue or distraction accurately in real time. False alarms may lead drivers to disable the system. Detecting impairment caused by substances such as alcohol also remains a technical challenge.
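FCW and AEB both come down to a timing question: how soon will the vehicle reach the object ahead, and has the driver reacted? A common way to frame this is time-to-collision (TTC), the current gap divided by the closing speed. The sketch below makes several assumptions. It holds the closing speed constant, and its thresholds (2.6 s to warn, 1.2 s to brake) are hypothetical values chosen only for illustration. Production systems also model deceleration, driver reaction time, and sensor confidence. Treat it as an illustration of warn-then-brake staging, not as a real calibration.

```python
from dataclasses import dataclass
from enum import Enum
import math


class Action(Enum):
    NONE = "none"
    WARN = "warn"    # FCW stage: alert the driver
    BRAKE = "brake"  # AEB stage: brake autonomously


@dataclass
class Track:
    range_m: float            # distance to the object ahead, in metres
    closing_speed_mps: float  # positive when the gap is shrinking


def time_to_collision(track: Track) -> float:
    """Constant-velocity TTC in seconds; infinite if the gap is not closing."""
    if track.closing_speed_mps <= 0.0:
        return math.inf
    return track.range_m / track.closing_speed_mps


def decide(track: Track, driver_braking: bool,
           warn_ttc_s: float = 2.6, brake_ttc_s: float = 1.2) -> Action:
    """Hypothetical staging: warn first, brake only if the driver has not responded."""
    ttc = time_to_collision(track)
    if ttc <= brake_ttc_s and not driver_braking:
        return Action.BRAKE
    if ttc <= warn_ttc_s:
        return Action.WARN  # keep warning even while the driver is braking
    return Action.NONE


if __name__ == "__main__":
    for gap_m in (30.0, 20.0, 10.0):
        print(gap_m, decide(Track(gap_m, 10.0), driver_braking=False))
```

With a 10 m/s closing speed, gaps of 30 m, 20 m, and 10 m give TTCs of 3.0 s, 2.0 s, and 1.0 s. Those map to no action, a warning, and autonomous braking. The warning threshold also shows the false-positive trade-off described above: set it too high and the system nags, set it too low and the driver has no time to react.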

Discrete ADAS Sensor Modalities

A critical element in preventing vehicle crashes is the ability to continuously monitor the vehicle's surroundings. ADAS systems rely on a combination of sensors, each with distinct capabilities and limitations:

- Radar is indispensable for detecting objects in poor weather and measures range and relative speed well, but its low angular resolution limits how precisely it can separate and classify objects. Imaging radar is emerging to address this, offering higher resolution and 3D capabilities that improve object differentiation and overall detection accuracy.

- Cameras excel at object recognition but are susceptible to failure in low light or bad weather. To address this, stereovision cameras and advanced image processing algorithms are improving depth perception and nighttime performance.

- LiDAR offers superior range and depth precision but performs poorly in heavy rain or fog. Recent advancements in solid-state LiDAR are making these sensors more affordable and durable, with improved precision for real-time mapping.

- Ultrasonic sensors are reliable in all weather but limited to short-range, low-resolution tasks like parking. Research into increasing their resolution and range could make them more versatile for urban environments.
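Two textbook approximations make the radar resolution trade-off concrete. FMCW range resolution is c/(2B), where B is the sweep bandwidth. For a uniform array of N virtual channels at half-wavelength spacing, angular resolution is roughly 2/N radians near boresight. The bandwidth and channel counts below are illustrative assumptions, not specifications of any particular product. The 12- and 192-channel cases stand in loosely for a conventional radar and an imaging radar.

```python
import math

SPEED_OF_LIGHT_MPS = 299_792_458.0


def range_resolution_m(bandwidth_hz: float) -> float:
    """FMCW range resolution: delta_R = c / (2 * B)."""
    return SPEED_OF_LIGHT_MPS / (2.0 * bandwidth_hz)


def angular_resolution_rad(num_virtual_channels: int) -> float:
    """Approximate boresight resolution of a uniform linear array with
    half-wavelength element spacing: lambda / (N * lambda / 2) = 2 / N rad."""
    return 2.0 / num_virtual_channels


def cross_range_resolution_m(distance_m: float, num_virtual_channels: int) -> float:
    """Smallest lateral separation resolvable at a distance (small-angle approximation)."""
    return distance_m * angular_resolution_rad(num_virtual_channels)


if __name__ == "__main__":
    print(f"Range resolution, 1 GHz sweep: {range_resolution_m(1e9):.3f} m")
    # Illustrative channel counts, e.g. 3 Tx x 4 Rx versus 12 Tx x 16 Rx MIMO (assumed).
    for channels in (12, 192):
        deg = math.degrees(angular_resolution_rad(channels))
        lateral = cross_range_resolution_m(50.0, channels)
        print(f"{channels:>3} channels: ~{deg:.1f} deg, ~{lateral:.2f} m at 50 m")
```

A 1 GHz sweep already resolves range to about 0.150 m. At 50 m, however, 12 channels separate objects only when they are about 8.33 m apart laterally (roughly 9.5 degrees), which is wider than a typical lane. With 192 channels the figure drops to about 0.52 m (roughly 0.6 degrees). This is why added channel count, rather than range, is the main lever imaging radar pulls.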

For engineers, the challenge lies in designing systems where multiple sensors work together seamlessly, ensuring redundancy so that the vehicle can make accurate decisions even when a sensor fails or performs poorly. Integrating advancements like imaging radar, high-resolution cameras, and solid-state LiDAR will significantly improve sensor performance and bring us closer to fully autonomous, crash-free vehicles.
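One way to express this redundancy requirement in software is a mode selector. It checks how many independent modalities are still healthy for a given function and degrades gracefully as they drop out. The sketch below is a hypothetical policy, not a standard or production design. The coverage map, the mode names, and the "two modalities for nominal operation" rule are all assumptions made for illustration.

```python
from enum import Enum


class Mode(Enum):
    NOMINAL = "nominal"            # at least `min_redundancy` independent modalities available
    DEGRADED = "degraded"          # one modality left: e.g. limit speed and alert the driver
    MINIMAL_RISK = "minimal_risk"  # no coverage: hand over or bring the car to a safe stop


# Hypothetical coverage map: modalities that can serve forward object detection.
# Ultrasonic sensors are left out because of their short range.
FORWARD_DETECTION = frozenset({"radar", "camera", "lidar"})


def select_mode(healthy: set[str], required: frozenset[str] = FORWARD_DETECTION,
                min_redundancy: int = 2) -> Mode:
    """Pick an operating mode from the set of sensors currently reporting healthy."""
    available = healthy & required
    if len(available) >= min_redundancy:
        return Mode.NOMINAL
    if available:
        return Mode.DEGRADED
    return Mode.MINIMAL_RISK


if __name__ == "__main__":
    # Heavy fog: camera and LiDAR flagged unhealthy, radar still working.
    print(select_mode({"radar", "ultrasonic"}))
```

In the fog example, radar alone still covers forward detection, so the vehicle runs in a degraded mode rather than losing the function outright. That is the behavior the redundancy argument above calls for.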

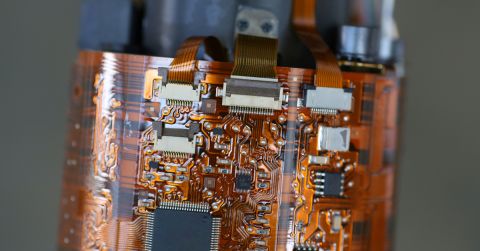

Sensor Fusion: Creating a Perception System

Sensor fusion merges data from multiple sensors to create a comprehensive environmental model. One of the most difficult decisions in ADAS design is determining when to fuse data from different sensors.

- Early fusion combines raw sensor data, providing richer input for perception algorithms but requiring more processing power and locking the system into a specific sensor set.

- Late fusion combines the object-level outputs that each sensor has already processed independently. This reduces computational load and offers more flexibility in sensor choice, but at the cost of less comprehensive data for machine learning algorithms to analyze.
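To show what late fusion looks like at its simplest, the sketch below assumes that each sensor reports an independent, unbiased range estimate for the same tracked object, with a known variance. It combines those estimates by inverse-variance weighting, the static one-dimensional case of a Kalman update. The sensor names and noise figures are illustrative assumptions. If errors are correlated between sensors, this weighting becomes overconfident, and techniques such as covariance intersection are used instead.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    sensor: str
    range_m: float
    variance_m2: float  # assumed known from sensor characterisation


def fuse_late(estimates: list[Estimate]) -> tuple[float, float]:
    """Inverse-variance weighted mean of independent per-sensor estimates.

    Returns (fused_range_m, fused_variance_m2). More precise sensors get more
    weight, and the fused variance is never larger than the best single input.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance_m2 for e in estimates]
    total = sum(weights)
    fused = sum(w * e.range_m for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    tracks = [Estimate("radar", 40.2, 0.04), Estimate("camera", 41.0, 1.0)]
    print(fuse_late(tracks))
```

Here the radar (standard deviation 0.2 m) dominates the camera (standard deviation 1 m), giving a fused range of about 40.23 m with a variance of about 0.038 m². Swapping in a different sensor only changes an entry in the list, which reflects the flexibility of late fusion. Early fusion would instead operate on raw pixels and point clouds before any object exists to average, so it cannot be reduced to a few numbers like this.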

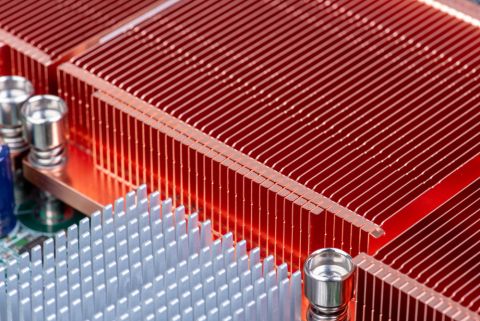

E/E Architecture: Centralized vs. Distributed Intelligence

As ADAS capabilities expand, so does the complexity of the vehicle's E/E architecture. The engineering community is currently exploring two primary approaches:

- Centralized E/E Architecture: In this model, data from all sensors is sent to a central computing unit for processing. This simplifies vehicle software management but increases wiring complexity, weight, and processing demand. The approach is often favored for premium or electric vehicles.

- Distributed Intelligence: Here, perception processing is distributed closer to the sensors, reducing the amount of data that needs to be transmitted and offering greater flexibility for scaling ADAS across different vehicle models. However, coordinating communication between distributed processors remains a challenge.
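A back-of-the-envelope calculation shows why moving perception closer to the sensors cuts the data that must cross the vehicle network. The figures below are illustrative assumptions, not requirements of any particular architecture. The camera is an 8 MP unit (3840 x 2160) streaming uncompressed 12-bit raw frames at 30 fps. Once detection runs at the sensor, the stream is replaced by an object list of up to 64 objects at 64 bytes each, also at 30 Hz.

```python
def raw_camera_bps(width_px: int, height_px: int, bits_per_px: int, fps: float) -> float:
    """Uncompressed sensor data rate in bits per second."""
    return width_px * height_px * bits_per_px * fps


def object_list_bps(max_objects: int, bytes_per_object: int, rate_hz: float) -> float:
    """Data rate when only a processed object list leaves the sensor."""
    return max_objects * bytes_per_object * 8 * rate_hz


if __name__ == "__main__":
    # Illustrative assumptions only (see lead-in).
    raw = raw_camera_bps(3840, 2160, 12, 30)
    objects = object_list_bps(64, 64, 30)
    print(f"raw stream:  {raw / 1e9:.2f} Gbit/s")
    print(f"object list: {objects / 1e6:.2f} Mbit/s")
    print(f"reduction:   ~{raw / objects:,.0f}x")
```

Under these assumptions a single raw camera stream needs about 3 Gbit/s, while its object list needs under 1 Mbit/s. A centralized design must carry and process the former for every camera. A distributed design pays instead in per-sensor compute and in the coordination challenge noted above.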

Scalability vs. Customization: Engineering for Diverse Vehicle Platforms

One significant challenge in achieving the uncrashable car is scalability. Centralized systems may be suitable for high-end, premium vehicles, but distributed systems offer greater modularity and scalability for manufacturers producing a wide range of models. Engineers must carefully balance advanced ADAS capabilities with the constraints of cost, power consumption, and weight.

How Close Are We to the Uncrashable Car?

Although ADAS technologies have made significant strides in reducing crash rates, we are still some distance away from realizing the uncrashable car. Key barriers remain:

- Sensor Limitations: Every current sensor modality has gaps. Cameras and LiDAR degrade in adverse weather and poor lighting, while radar, though robust in bad weather, lacks the resolution to classify objects reliably. As a result, systems cannot yet perform dependably in all environments.

- Driver Interaction: ADAS systems still rely heavily on driver engagement. Technologies like FCW and LDW are ineffective if drivers fail to respond to warnings. Fully autonomous systems, which can operate without human input, are not yet widely available.

- E/E Architecture Complexity: Scaling centralized or distributed intelligence architectures across various vehicle models without escalating costs or complexity remains a challenge.

- Regulation and Standardization: There is a lack of global regulatory standards governing ADAS performance and data-sharing across platforms, which hinders the widespread adoption of these technologies.

As advances in AI, sensor technology, and vehicle architecture continue, engineers are gradually overcoming these obstacles. However, the vision of the uncrashable car will require not only technological breakthroughs but also a comprehensive rethinking of vehicle architectures and infrastructure. While full realization is still years away, each incremental improvement brings us closer to a safer, autonomous future on the road.