Components for Machine Vision System Design

Smart cameras, computer vision, machine vision… no matter what you call it, vision applications are making their way into more products. The classic application most PCB designers are familiar with is manufacturing, followed more recently by facial recognition on smartphones. Designers who regularly work with machine vision systems are seeing many unique applications in medicine, automotive, robotics, aerospace, security, and other areas.

One interesting trend in the electronics industry is that new technology becomes easier to integrate into new systems as it proliferates. Machine vision has crossed the threshold where standardization, developer support, and a wealth of components are available to designers who want to innovate in this area. Today, several popular hardware platforms offer an entry-level option for newer designers. If you’re beyond prototyping and you’re preparing production-grade hardware, newer processors are enabling more highly integrated systems that leverage advanced technologies like machine learning.

In this guide, we’ll explore some of the components needed to build machine vision systems and the resources available to embedded developers. Machine vision systems have an important hardware and mechanical engineering element, as we’re dealing with cameras and optics. Developing these systems involves tasks that span multiple disciplines. Fortunately, component manufacturers and open-source developers provide many resources to help expedite hardware design and systems development, and interested developers are encouraged to look at some of the examples we’ll show here to get started.

Machine Vision System Architecture

In terms of the electronics, machine vision systems share a fairly generic architecture. The block diagram below illustrates this high-level architecture. These systems can have varying levels of integration and form factors, with some of the blocks shown below possibly integrated into a more powerful processor.

High-level machine vision system architecture

There is no quintessential machine vision system that can be used as a reference for other designs as machine vision is a capability, not a product or specific type of design. Machine vision systems have common characteristics as shown in the block diagram above, but they are implemented with different component sets. Rather than throwing out lists of components to support machine vision systems, we need to start at the processor level to see how image processing algorithms can be implemented efficiently.

FPGA, MPU, or GPU?

MCUs or MCU-based SoCs have always been popular, even for systems implementing machine vision capabilities. MCUs with standard digital interfaces can interface with more specialized ASICs for vision processing, while FPGAs can be large enough to implement inline image acquisition, DSP functions, and other system-level processing. GPUs can provide a dedicated external vision processor that supplies data back to the host controller.

In terms of capability, the MPU + FPGA or MPU + GPU architectures are preferable for near-real-time machine vision. They are also preferable for processing higher resolution images, as the FPGA/GPU can parallelize the workload, which cuts down processing time when high resolution/high color depth processing is required. Flexible logic is the major advantage of an FPGA, although system management and algorithms for advanced processing are more difficult to develop without prior experience.

All of this isn’t to say that MCUs and MPUs can’t be used as host controllers in machine vision applications. For example, your phone and computer aren’t necessarily using a dedicated FPGA or GPU for this, yet they can perform some simpler image processing tasks on low-frame-rate video and static images. To be fair, the CPU/SoC in those devices tends to have much more compute than a high-end MPU. An MCU could be used for lower resolution, lower frame rate, lower color depth, or monochrome image processing, and as you add more compute you can handle more detailed images. MPUs are a better option in production as you might need to access external high-speed memory, which means you’ll need a DDR RAM interface and possibly PCIe for some peripherals, something you won’t typically find on a slower MCU.
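To make the resolution, frame rate, and color depth trade-off concrete, here is a minimal sketch that estimates the raw (uncompressed) frame size and pixel data rate for a stream. The function names and the two example streams are assumptions chosen for illustration; real sensors add blanking intervals and protocol overhead that this arithmetic ignores.

```python
def frame_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Size of one uncompressed frame in bytes (rounded up)."""
    return (width * height * bits_per_pixel + 7) // 8


def stream_rate_mb_per_s(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Raw pixel data rate in megabytes per second (1 MB = 10**6 bytes)."""
    return frame_bytes(width, height, bits_per_pixel) * fps / 1e6


# Hypothetical example streams, chosen only for illustration.
qvga_mono = stream_rate_mb_per_s(320, 240, 8, 15)        # low-res monochrome
full_hd_rgb = stream_rate_mb_per_s(1920, 1080, 24, 60)   # high-res color

print(f"QVGA mono @ 15 fps:  {qvga_mono:.2f} MB/s")      # 1.15 MB/s
print(f"1080p RGB @ 60 fps: {full_hd_rgb:.2f} MB/s")     # 373.25 MB/s
```

A single QVGA monochrome frame is about 77 kB, while a single 1080p RGB frame is about 6.2 MB, which is one way to see why higher-resolution color video tends to push a design toward external memory and a faster processor.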

Application-Specific vs. Generalized

If you want to implement more advanced image recognition and segmentation algorithms in your system, then you might opt for an SOM, COM, or single-board computer as the main controller for your machine vision application. If a processor has enough on-chip memory and external RAM, it can typically implement most acquisition and processing tasks while also running an application to control the rest of your system. These architectures are often used for threshold-based image segmentation and object detection tasks in very specific applications from low to moderate frame rate video data.
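As a rough illustration of threshold-based segmentation and object detection, the sketch below builds a binary mask from a grayscale frame and finds the bounding box of the bright region. It uses plain Python lists so it runs anywhere; the frame data, the threshold level, and the helper names are assumptions made for this example. In a real application you would more likely use a library routine such as OpenCV's `cv2.threshold`.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # rows of 8-bit grayscale pixel values (0-255)


def threshold(image: Image, level: int) -> Image:
    """Return a binary mask: 1 where the pixel is brighter than level, else 0."""
    return [[1 if px > level else 0 for px in row] for row in image]


def bounding_box(mask: Image) -> Optional[Tuple[int, int, int, int]]:
    """Smallest (top, left, bottom, right) box holding all foreground pixels."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None  # nothing exceeded the threshold
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]


# Synthetic 5x6 frame: a bright 2x3 object on a dark background.
frame = [
    [10, 12, 11, 10, 9, 10],
    [11, 200, 210, 205, 10, 12],
    [10, 198, 220, 207, 11, 10],
    [9, 10, 12, 11, 10, 9],
    [10, 11, 10, 9, 12, 10],
]
mask = threshold(frame, 128)
print(bounding_box(mask))  # (1, 1, 2, 3)
```

A fixed threshold like this only works when lighting and background are well controlled, which is part of why these approaches suit very specific applications.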

Challenges in Machine Vision Design

Like many other designs that essentially operate with low-level analog signals, machine vision systems have their own set of electrical challenges that need to be considered. Some of the solutions lie at the image processing level, while others are at the system level and relate to component selection. Here are some of the major challenges designers must consider in machine vision design:

Heat management: Board-mounted high-resolution cameras in more compact systems can be exposed to a lot of heat during operation, which might require heat sinking or airflow through the enclosure to stay cool. Systems with smaller processors and larger boards can usually stay cool on their own, but smaller boards with faster processors might require a thermal interface material bonded to the enclosure to remove heat.

EMI/EMC: Because machine vision systems are generally mixed-signal systems, they require proper layout to prevent interference between digital components and the output from a CMOS sensor or CCD. CMOS might be a better option as data conversion is done at the chip level. To ensure accurate image recovery in compact systems with fast processing, make sure you implement some best practices for EMI suppression.

Power integrity: Vision systems and HMI systems can experience power integrity problems if the layout is not constructed properly, even when the design is not running with high speed components or protocols. This might sound like a problem that can be filtered away, but this is not the case. Instead, the solution can be as simple as ensuring a ground plane is placed beneath all digital signals routed onto the host board.

Mounting optics: Vision systems require optics to define object and image planes, and to form clear images on the imaging sensor so data acquisition can occur. Modern machine vision systems need a stable mount with low hysteresis and possibly automated focusing with integrated motor control. This is a mechanical design challenge as well as an electrical design challenge, where the latter involves power draw in a motor control and timing circuit.
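One common way to automate focusing is contrast detection: step the lens through a range of positions, score each captured image for sharpness, and keep the best position. The sketch below is a simplified illustration of that idea, not a description of any particular product. The `capture_at` callback, the `fake_capture` stand-in for the motor and sensor, and the sharpness metric are all assumptions made for this example.

```python
from typing import Callable, List, Sequence

Image = List[List[int]]  # rows of grayscale pixel values


def sharpness(image: Image) -> int:
    """Sum of squared differences between horizontally adjacent pixels."""
    return sum((row[i + 1] - row[i]) ** 2 for row in image for i in range(len(row) - 1))


def autofocus(capture_at: Callable[[int], Image], positions: Sequence[int]) -> int:
    """Sweep the lens positions and return the one giving the sharpest image."""
    return max(positions, key=lambda pos: sharpness(capture_at(pos)))


def fake_capture(position: int, best: int = 6) -> Image:
    """Hypothetical stand-in for motor + sensor: edge contrast drops away from best."""
    contrast = max(0, 100 - 20 * abs(position - best))
    low, high = 128 - contrast // 2, 128 + contrast // 2
    return [[low, low, high, high]] * 4  # a single vertical edge


print(autofocus(fake_capture, range(0, 11)))  # 6
```

A full sweep like this is slow on real hardware, so practical designs often use a coarse sweep followed by a fine search around the peak, and the motor timing and power draw noted above still need to be budgeted for.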

Components You’ll Need

Obviously, we can’t show every possible component in this article as it would simply be too long. What we can do is give you a good list of components for building different portions of your system. In the list below, I’ve included some example components from each portion of a new system to help you get started:

MCUs like STM32 and MSP430 are a great option for running a lightweight machine vision system and implementing some simpler AI inference tasks.

For something with much more compute, the Zynq FPGA platform is a great option for machine vision systems. Xilinx also offers a lot of developer support for machine vision systems built on Zynq.

CMOS image sensors and CCD sensors can be either monochrome or color, and they come in a range of resolutions. Something like the MT9P031I12STC-DR1 from ON Semiconductor is a good example of a color CMOS sensor for high-resolution machine vision applications.

For developing a high-level application, OpenCV is an excellent set of open source libraries that includes many of the standard image processing functions used in machine vision systems. There are other open source libraries online that can be used for more specialized application development.

I would recommend a GPU or FPGA for more generalized tasks like image recognition, segmentation, and object identification with tracking in video data without applying application-specific pre-processing (i.e., from raw images). I mention this here because we need to consider how to interface with the image sensor, and the sensor needs to be matched to the processor. In an FPGA, the acquisition logic and subsequent processing can be implemented in parallel alongside the rest of the logic for the application.

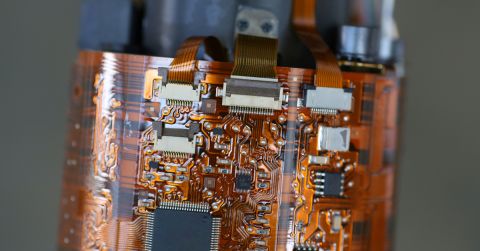

One popular open hardware platform that can be used for system development is OpenMV. These camera modules incorporate logic for image acquisition, hardware for mounting optical elements, and a PCB designed with EMI/EMC and signal/power integrity in mind. They are also compatible with STM32 MCUs and other platforms. This is a good system for application developers who want to use an external processor as the host controller for a larger application in the system, but without getting bogged down in hardware development and debugging.

M7 camera module (left) and FLIR Lepton Adapter module (right).

Integrating Machine Vision Designs with the Cloud

Machine vision is highly useful in all its forms, and it should be no surprise that advanced MCU, MPU, or FPGA-based solutions are being integrated with the cloud. Depending on how cloud compute is leveraged, it may be best to offload processing to the data center rather than perform it at the edge, in which case an MCU or lightweight MPU could be used. When fast processing is required in the field, processing tasks should be performed in the field with a more powerful host controller (FPGA, MPU + FPGA, or MPU + GPU). Again, the choice depends on the amount of preprocessing you perform on the device to clean up and compress images before sending them into the cloud for processing.
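A quick way to sanity-check the edge-versus-cloud decision is to estimate how long it takes to upload each frame after on-device compression and compare that with the frame period. The sketch below does only that arithmetic; the uplink speed, compression ratio, and frame rate are hypothetical figures, and it ignores network round-trip latency and cloud processing time.

```python
def upload_time_ms(frame_bytes: int, compression_ratio: float, uplink_mbps: float) -> float:
    """Time to send one compressed frame over the uplink, in milliseconds."""
    compressed_bits = frame_bytes * 8 / compression_ratio
    return compressed_bits / (uplink_mbps * 1e6) * 1e3


def can_offload(frame_bytes: int, compression_ratio: float, uplink_mbps: float, fps: float) -> bool:
    """True if each frame can be uploaded within one frame period."""
    return upload_time_ms(frame_bytes, compression_ratio, uplink_mbps) <= 1000.0 / fps


raw_frame = 1920 * 1080 * 3  # one uncompressed 1080p RGB frame, in bytes

# Hypothetical link and compression figures, for illustration only.
print(can_offload(raw_frame, compression_ratio=1, uplink_mbps=20, fps=10))   # False
print(can_offload(raw_frame, compression_ratio=50, uplink_mbps=20, fps=10))  # True
```

With these assumed numbers, an uncompressed frame takes roughly 2.5 seconds to upload, while a frame compressed 50:1 takes about 50 ms, which fits within the 100 ms frame period at 10 fps. If the uplink cannot keep up even after compression, that is a sign the processing belongs at the edge.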

For AI/ML applications involving machine vision, some companies provide SoCs and ASICs as a complement to slower processors or as add-on modules for SBCs. Google Coral is one of these options, acting as a dedicated processor for running TensorFlow Lite models involving inference from image data. Other options are coming onto the market from startups that are targeting AI inference for multiple video streams, ideally replacing GPUs with smaller packages.

Machine vision systems need to do much more than just capture and process images. Today’s systems are connected and they interface with multiple sensors, so your design will likely need other components beyond the imaging and processing chain.

Machine vision systems design requires expertise in multiple areas and collaboration with a team, but component selection for these systems shouldn’t be difficult. When you need to find components for your machine vision application, use the advanced search and filtration features in Octopart. When you use Octopart’s electronics search engine, you’ll have access to up-to-date distributor pricing data, parts inventory, and parts specifications, and it’s all freely accessible in a user-friendly interface. Take a look at our integrated circuits page to find the components you need.
