Building a Smarter Future: Integrating AI into Embedded Systems

Artificial intelligence (AI) has made its way from science fiction into everyday life, revolutionizing industries ranging from healthcare to automotive. AI-powered devices are now an integral part of our lives, driving innovations like smart homes, autonomous vehicles, and personal assistants. However, while AI opens doors to new capabilities, integrating these sophisticated algorithms into embedded systems—small, resource-constrained devices—presents a significant challenge for engineers.

Embedded systems often operate under strict power, processing, and space limitations, making it difficult to implement computationally demanding AI algorithms without sacrificing performance or energy efficiency. In this article, we’ll explore how engineers are overcoming these challenges by leveraging cutting-edge hardware design techniques and optimizing AI algorithms for embedded applications. We will dive into strategies for handling computational load, explore custom hardware accelerators, and discuss future trends in AI for embedded systems.

The Growing Complexity of AI in Embedded Systems

As AI algorithms have advanced, they have become more computationally intensive. For instance, modern AI tasks like object recognition require over 100 times more operations than they did just five years ago. While these advances unlock groundbreaking capabilities, such as real-time image processing and voice recognition, they also put tremendous strain on embedded systems, which are typically designed to operate within tight power and performance budgets.
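The "operations" in comparisons like this are usually counted as multiply-accumulates (MACs). As a minimal sketch, the MAC count of one standard 2D convolution layer follows directly from its shape. The layer dimensions below are hypothetical and chosen only for illustration.

```python
def conv2d_macs(h_out: int, w_out: int, c_in: int, c_out: int,
                k_h: int, k_w: int) -> int:
    """Multiply-accumulates for one standard (dense, ungrouped) 2D convolution.

    Each of the h_out * w_out * c_out output values is a dot product over a
    k_h x k_w window that spans all c_in input channels.
    """
    return h_out * w_out * c_out * (c_in * k_h * k_w)


# Hypothetical layer: 3x3 kernel, 64 -> 128 channels, 56x56 output feature map.
macs = conv2d_macs(h_out=56, w_out=56, c_in=64, c_out=128, k_h=3, k_w=3)
print(f"{macs:,} MACs")  # prints "231,211,008 MACs"; each MAC is one multiply plus one add
```

A single mid-sized layer like this already needs about 231 million MACs per inference, and a full network stacks many such layers.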

To make matters more challenging, the rapid development of AI algorithms has outpaced the growth of processor and silicon technologies. In other words, while AI software has evolved exponentially, the hardware that powers it hasn’t kept up at the same pace. As a result, embedded systems engineers are increasingly tasked with optimizing performance on hardware that was not originally designed for the complexities of modern AI workloads. This imbalance calls for innovative solutions to ensure that embedded systems can keep up without becoming inefficient or energy-draining.

Managing the Computational Load: Offloading vs. On-Device Processing

One of the first critical decisions engineers face when integrating AI into an embedded system is how to manage the computational load. Essentially, they need to decide whether to process AI workloads locally on the device or offload the computation to a more powerful, cloud-based system.

Offloading AI Processing

In some cases, it makes sense to offload AI computations to a remote server or cloud data center. This is particularly effective for devices that do not require real-time processing or where communication latency is acceptable. For example, a smart thermostat might collect temperature and other sensor data locally, while the actual AI-based processing (such as predicting optimal temperature settings from usage patterns) is done in the cloud. This offloading can significantly reduce the burden on the embedded system’s processor, freeing it to handle more basic tasks like sensor monitoring or communication.

The key benefit here is that the cloud provides nearly unlimited computational resources, allowing AI algorithms to run without the constraints of local hardware. As long as communication overhead remains manageable and the system doesn’t have strict real-time or low-latency requirements, offloading is an efficient way to implement AI functionality.
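Whether communication overhead is "manageable" can be estimated with simple arithmetic. The sketch below assumes a deliberately simplified model (upload time plus one network round trip plus server compute time) and uses hypothetical figures for a thermostat. Real links add queuing, retries, and variable latency.

```python
def offload_latency_ms(payload_bytes: int, uplink_mbps: float,
                       rtt_ms: float, server_ms: float) -> float:
    """Rough end-to-end latency for one offloaded inference request.

    Simplified model: upload time + one network round trip + server compute.
    Download of the (usually small) result, queuing, and retries are ignored.
    """
    upload_ms = payload_bytes * 8 / (uplink_mbps * 1_000_000) * 1_000
    return upload_ms + rtt_ms + server_ms


# Hypothetical thermostat: 2 KB of readings over a 1 Mbit/s uplink.
latency = offload_latency_ms(payload_bytes=2_000, uplink_mbps=1.0,
                             rtt_ms=80.0, server_ms=20.0)
print(f"estimated offload latency: {latency:.1f} ms")
```

Roughly 116 ms is irrelevant for a thermostat that adjusts settings over minutes, but plugging in a larger payload, a slower link, or a tighter deadline shows how quickly offloading can become the bottleneck.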

The Need for On-Device Processing

However, not all applications can rely on the cloud. Devices with real-time processing needs, such as autonomous vehicles, drones, or industrial robots, cannot afford the delays caused by sending data back and forth to the cloud. These systems require immediate AI-driven decisions, such as obstacle detection or motion control, which must be processed locally to ensure timely reactions.

In addition, some applications, such as medical devices or security cameras, handle sensitive data, and transmitting that information to the cloud raises privacy and security concerns. In these cases, AI algorithms must run directly on the device, keeping the data local to protect its confidentiality and support compliance with privacy regulations.
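The criteria from the offloading and on-device discussion can be condensed into a simple placement rule. This is a hypothetical policy for illustration only: the `Workload` fields and the preference for offloading whenever it is both allowed and fast enough are assumptions, not a standard algorithm.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    deadline_ms: float         # latest acceptable time to produce a decision
    local_latency_ms: float    # estimated on-device inference time
    offload_latency_ms: float  # estimated network + server time
    sensitive_data: bool       # e.g. medical or camera data subject to privacy rules


def choose_placement(w: Workload) -> str:
    """Return "offload", "on-device", or "needs faster hardware" (hypothetical policy)."""
    # Sensitive data never leaves the device, regardless of speed.
    if w.sensitive_data:
        return "on-device" if w.local_latency_ms <= w.deadline_ms else "needs faster hardware"
    # Offloading frees the local processor, so prefer it when it meets the deadline.
    if w.offload_latency_ms <= w.deadline_ms:
        return "offload"
    if w.local_latency_ms <= w.deadline_ms:
        return "on-device"
    return "needs faster hardware"


# Hypothetical numbers for the kinds of devices mentioned in the text.
thermostat = Workload(deadline_ms=60_000, local_latency_ms=900,
                      offload_latency_ms=116, sensitive_data=False)
obstacle_detector = Workload(deadline_ms=20, local_latency_ms=12,
                             offload_latency_ms=130, sensitive_data=False)
medical_monitor = Workload(deadline_ms=100, local_latency_ms=40,
                           offload_latency_ms=60, sensitive_data=True)
for name, w in [("thermostat", thermostat), ("obstacle detector", obstacle_detector),
                ("medical monitor", medical_monitor)]:
    print(f"{name}: {choose_placement(w)}")
```

The "needs faster hardware" outcome is exactly the situation that pushes engineers toward hardware acceleration.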

To meet the performance requirements of real-time applications, embedded systems must be equipped with more advanced processing capabilities. This leads engineers to explore various hardware acceleration techniques, which help boost the computational power of embedded devices without significantly increasing energy consumption or size.

Hardware Acceleration: From General-Purpose CPUs to Custom AI Solutions

General-purpose processors, such as microcontrollers and the application processors found in systems-on-chip (SoCs), can handle basic AI workloads to a limited extent. Many include optimization features such as vector (SIMD) instructions, which apply one operation to several data elements at once and help them perform mathematical operations more efficiently. However, their largely sequential execution model limits their performance on the massively parallel operations required by modern AI algorithms.
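To illustrate what vector instructions change, the sketch below contrasts a scalar dot product with the structure a SIMD loop uses. Python cannot issue SIMD instructions, so this only models the data flow. The 4-lane width is a hypothetical choice (for example, four 32-bit values in a 128-bit register); on a real microcontroller the equivalent would come from compiler auto-vectorization or vendor intrinsics.

```python
LANES = 4  # hypothetical vector width, e.g. four 32-bit lanes in a 128-bit register


def dot_scalar(a, b):
    """One multiply-accumulate per step; returns (result, steps)."""
    acc = 0
    steps = 0
    for x, y in zip(a, b):
        acc += x * y
        steps += 1
    return acc, steps


def dot_simd_style(a, b):
    """Models a vectorized dot product; returns (result, steps).

    Each step multiplies LANES element pairs into LANES partial sums (in
    hardware, one vector instruction). A horizontal add then combines the
    lanes, and a scalar tail loop handles any leftover elements.
    """
    n = len(a)
    main = n - n % LANES
    lanes = [0] * LANES
    steps = 0
    for i in range(0, main, LANES):
        for lane in range(LANES):  # these run in parallel on real SIMD hardware
            lanes[lane] += a[i + lane] * b[i + lane]
        steps += 1
    total = sum(lanes)  # horizontal reduction across lanes
    for i in range(main, n):  # scalar tail
        total += a[i] * b[i]
        steps += 1
    return total, steps


a = list(range(10))
b = [2] * 10
print(dot_scalar(a, b))      # (90, 10)
print(dot_simd_style(a, b))  # (90, 4)
```

Integer data is used here because reordering floating-point additions across lanes can change the result slightly, which is one reason vectorized and scalar code do not always match bit for bit.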

GPUs and NPUs: Boosting AI Performance

To overcome these limitations, engineers frequently turn to hardware accelerators such as Graphics Processing Units (GPUs) and Neural Processing Units (NPUs). GPUs are well-suited for AI tasks because of their ability to process data in parallel. They can handle large arrays of data simultaneously, making them an excellent option for processing high volumes of information, such as images or sensor inputs.

NPUs, on the other hand, are even more specialized. They are designed specifically for deep learning and AI applications, providing higher performance for neural network computations; Google's Tensor Processing Units (TPUs) are a well-known example of this category. NPUs accelerate the matrix multiplications and convolutions central to AI algorithms, resulting in faster processing and lower power consumption compared to general-purpose CPUs.
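One common way to map a convolution onto matrix-multiply hardware is the im2col transformation, which unrolls each input patch into a row so that the whole convolution becomes a single matrix product. Whether a particular NPU uses im2col internally varies by design, so treat this single-channel, pure-Python sketch as an illustration of why the two operations are interchangeable, not as a description of any specific chip.

```python
def conv2d_direct(x, k):
    """'Valid' single-channel 2D convolution as computed by DL frameworks
    (technically cross-correlation: the kernel is not flipped)."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[sum(x[i + u][j + v] * k[u][v] for u in range(kh) for v in range(kw))
             for j in range(ow)]
            for i in range(oh)]


def im2col(x, kh, kw):
    """Unroll every kh x kw patch of x into one row: shape (oh*ow) x (kh*kw)."""
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[x[i + u][j + v] for u in range(kh) for v in range(kw)]
            for i in range(oh) for j in range(ow)]


def matmul(a, b):
    """Plain matrix product: the operation matrix-multiply hardware accelerates."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def conv2d_as_matmul(x, k):
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    patches = im2col(x, kh, kw)                                  # (oh*ow) x (kh*kw)
    weights = [[k[u][v]] for u in range(kh) for v in range(kw)]  # (kh*kw) x 1
    flat = matmul(patches, weights)                              # (oh*ow) x 1
    return [[flat[i * ow + j][0] for j in range(ow)] for i in range(oh)]


x = [[4 * i + j + 1 for j in range(4)] for i in range(4)]  # 4x4 input: 1..16
k = [[1, 0], [0, -1]]                                      # 2x2 kernel
assert conv2d_direct(x, k) == conv2d_as_matmul(x, k)
print(conv2d_as_matmul(x, k))  # [[-5, -5, -5], [-5, -5, -5], [-5, -5, -5]]
```

With several input and output channels, each im2col row grows to kh*kw*c_in entries and the weight matrix gains one column per output channel, so the whole layer becomes one large matrix multiplication.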

While GPUs and NPUs provide a substantial boost in performance, they come with trade-offs. Discrete GPUs in particular are often power-hungry and physically large, and even integrated accelerators add power draw, silicon area, and cost that smaller embedded or edge devices, with their tight power and space constraints, may not be able to afford.

Custom AI Accelerators: Tailored for Efficiency

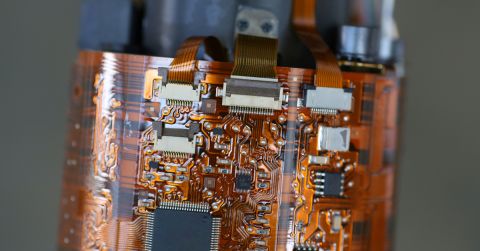

For systems that require extreme performance or operate under strict power and size limitations, custom AI accelerators provide a compelling solution. These accelerators, typically implemented using Application-Specific Integrated Circuits (ASICs) or Field-Programmable Gate Arrays (FPGAs), are designed from the ground up to handle specific AI workloads.

Unlike more general accelerators such as GPUs or NPUs, custom accelerators can be fine-tuned to execute only the tasks required by a particular AI algorithm. This targeted approach allows engineers to optimize the hardware for both performance and energy efficiency. For example, by focusing on the specific type of matrix multiplication needed for a particular neural network, a custom AI accelerator can significantly reduce both the hardware size and power consumption.
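As a behavioral sketch (not a hardware description), assume a hypothetical accelerator whose only operation is a 4x4 multiply-accumulate on signed 8-bit operands. Everything else, such as padding matrices and tiling a larger product into 4x4 blocks, is left to software around it. The tile size, operand width, and function names are illustrative assumptions.

```python
TILE = 4  # hypothetical: the only block size the accelerator implements


def check_int8(m):
    """The fixed datapath accepts signed 8-bit operands and nothing else."""
    if any(not -128 <= v <= 127 for row in m for v in row):
        raise ValueError("accelerator only accepts signed 8-bit operands")


def tile_mac(a, b, c, i0, j0, k0):
    """One accelerator call: C[i0:i0+T, j0:j0+T] += A[i0:i0+T, k0:k0+T] @ B[k0:k0+T, j0:j0+T].

    In silicon this would be a fixed grid of small integer multipliers feeding
    wider (e.g. 32-bit) accumulators; here it is an ordinary loop.
    """
    for i in range(i0, i0 + TILE):
        for j in range(j0, j0 + TILE):
            c[i][j] += sum(a[i][t] * b[t][j] for t in range(k0, k0 + TILE))


def round_up(d):
    """Round d up to a multiple of TILE."""
    return -(-d // TILE) * TILE


def pad(m, rows, cols):
    """Zero-pad matrix m to rows x cols."""
    return [row + [0] * (cols - len(row)) for row in m] + [[0] * cols for _ in range(rows - len(m))]


def matmul_on_accelerator(a, b):
    """Software driver: pads to tile multiples and issues one tile_mac per block."""
    check_int8(a)
    check_int8(b)
    n, k, m = len(a), len(b), len(b[0])
    rows, inner, cols = round_up(n), round_up(k), round_up(m)
    ap, bp = pad(a, rows, inner), pad(b, inner, cols)
    c = [[0] * cols for _ in range(rows)]
    calls = 0
    for i0 in range(0, rows, TILE):
        for j0 in range(0, cols, TILE):
            for k0 in range(0, inner, TILE):
                tile_mac(ap, bp, c, i0, j0, k0)
                calls += 1
    return [row[:m] for row in c[:n]], calls


result, calls = matmul_on_accelerator([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]])
print(result, calls)  # [[58, 64], [139, 154]] 1
```

The loss of flexibility is visible even in this toy: a model that needs 16-bit weights is rejected outright, and a small layer wastes most of each padded tile.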

Custom accelerators offer significant benefits in terms of efficiency, but they come at the cost of flexibility. Because these accelerators are designed for specific tasks, they may not be easily adaptable to different AI workloads or future algorithm changes. Engineers must carefully weigh the trade-off between achieving peak performance and maintaining the flexibility to support new use cases.

Optimizing Power and Performance: Efficient Data Representation

Another key strategy for improving the power efficiency of AI in embedded systems is optimizing the way data is represented during computation. Many AI frameworks, such as TensorFlow and PyTorch, typically use 32-bit floating-point numbers for their operations. However, in many cases, AI algorithms can operate effectively with smaller, more efficient data types, such as 16-bit floating-point or fixed-point formats.
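To see what dropping from 32-bit to 16-bit floating point costs in accuracy, Python's standard `struct` module can round values to IEEE 754 single (`'f'`) and half (`'e'`) precision. The weights below are made-up example values.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float and back."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_float16(x: float) -> float:
    """Round to the nearest IEEE 754 half-precision value and back.
    struct raises OverflowError beyond the fp16 range (about +/-65504)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


weights = [0.1234567, -0.9876543, 0.0005, 3.14159]  # hypothetical example weights
for w in weights:
    w32, w16 = to_float32(w), to_float16(w)
    print(f"fp32={w32:+.7f}  fp16={w16:+.7f}  abs err={abs(w32 - w16):.1e}")

print("bytes per value:", struct.calcsize("<f"), "vs", struct.calcsize("<e"))  # 4 vs 2
```

Half precision keeps roughly three significant decimal digits (an 11-bit significand), which is often sufficient for neural network inference while halving storage.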

Shrinking Data for Better Performance

By reducing the number of bits used to represent each piece of data, engineers can significantly reduce both the size and power requirements of the hardware performing the computation. For example, using a 10-bit fixed-point representation instead of a 32-bit floating-point number allows for far smaller multipliers, reducing hardware size by up to 95%. This reduction in hardware complexity leads to more energy-efficient designs, allowing embedded systems to process AI workloads without exceeding their power budgets.
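A fixed-point value is just an integer with an implied binary point. The sketch below assumes a hypothetical signed 10-bit format with 8 fractional bits (range [-2, 2 - 2^-8], resolution 2^-8). Multiplying two such values needs only a 10x10-bit integer multiplier followed by a shift, with none of the exponent handling and normalization logic that a 32-bit floating-point multiplier requires.

```python
TOTAL_BITS = 10  # hypothetical format: signed 10-bit two's complement
FRAC_BITS = 8    # 8 fractional bits -> resolution 2**-8, range [-2, 2 - 2**-8]

QMIN = -(1 << (TOTAL_BITS - 1))     # -512
QMAX = (1 << (TOTAL_BITS - 1)) - 1  # 511


def saturate(q: int) -> int:
    """Clamp to the representable 10-bit range instead of wrapping around."""
    return max(QMIN, min(QMAX, q))


def quantize(x: float) -> int:
    """Scale by 2**FRAC_BITS and round to nearest (Python's round() breaks ties to even)."""
    return saturate(round(x * (1 << FRAC_BITS)))


def dequantize(q: int) -> float:
    return q / (1 << FRAC_BITS)


def fixed_mul(qa: int, qb: int) -> int:
    """Integer multiply, then drop the extra FRAC_BITS fractional bits.

    The raw product carries 2*FRAC_BITS fractional bits and fits in 20 bits.
    Adding half an LSB before the arithmetic right shift rounds to nearest
    (ties toward +infinity).
    """
    prod = qa * qb
    return saturate((prod + (1 << (FRAC_BITS - 1))) >> FRAC_BITS)


qa, qb = quantize(0.75), quantize(-1.25)
print(qa, qb, dequantize(fixed_mul(qa, qb)))  # 192 -320 -0.9375
```

With 8 fractional bits, the rounding error per stored value is at most 2^-9 (about 0.002). Whether a given model tolerates that, and which split between integer and fractional bits it needs, has to be checked against its accuracy.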

Smaller data representations also help alleviate another key bottleneck in embedded systems: memory bandwidth. Moving large amounts of data between memory and processing units can slow down computation and waste energy. By reducing the amount of data being transferred, engineers can enhance system efficiency and improve the overall performance of AI algorithms.
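The effect on memory traffic is easy to estimate. The sketch below assumes a hypothetical model with one million weights, run 30 times per second, where every weight is fetched from external memory on every inference (a pessimistic assumption that ignores on-chip caching and reuse) and narrow formats are tightly bit-packed.

```python
def weight_traffic_bytes_per_s(num_params: int, bits_per_value: int,
                               inferences_per_s: int) -> int:
    """Bytes per second needed to stream every weight once per inference."""
    bytes_per_inference = (num_params * bits_per_value + 7) // 8  # round up to whole bytes
    return bytes_per_inference * inferences_per_s


NUM_PARAMS = 1_000_000  # hypothetical model size
RATE = 30               # hypothetical inferences per second

for name, bits in [("fp32", 32), ("fp16", 16), ("10-bit fixed", 10), ("int8", 8)]:
    mb_per_s = weight_traffic_bytes_per_s(NUM_PARAMS, bits, RATE) / 1e6
    print(f"{name:>12}: {mb_per_s:6.1f} MB/s")
```

Under these assumptions, the 10-bit format cuts weight traffic from 120 MB/s to 37.5 MB/s, roughly a 69% reduction, before activations are even counted.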

High-Level Synthesis: Streamlining AI Hardware Design

While custom hardware accelerators offer substantial advantages in performance and efficiency, designing them from scratch can be a time-consuming and complex process. High-Level Synthesis (HLS) tools have emerged as a valuable solution to this challenge. HLS allows engineers to describe algorithms in a high-level language, most commonly C or C++, and automatically converts that description into a register-transfer level (RTL) hardware design, which can then be implemented on an FPGA or taken through an ASIC design flow. Python-based front ends can generate that HLS code from AI models.

Simplifying Hardware Development with HLS

HLS significantly reduces development time by enabling engineers to experiment with different architectural configurations and evaluate trade-offs between power, performance, and flexibility. For example, engineers can use HLS to explore different data representations (such as 16-bit vs. 32-bit floating-point) or to test various parallelization techniques before committing to a final hardware design.
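The kind of exploration described above can be sketched as a sweep over two knobs that HLS tools typically expose: how many multipliers work in parallel (set by loop unrolling) and the numeric width (set by arbitrary-precision types such as `ap_fixed` in AMD/Xilinx HLS). A real flow would read latency and resource figures from the tool's synthesis reports. Here a crude, hypothetical cost model stands in (one MAC per multiplier per cycle, multiplier area proportional to width squared), and the layer size, latency budget, and minimum width are made-up values.

```python
from itertools import product

MACS = 64 * 32          # hypothetical dense layer: 64 inputs x 32 outputs
LATENCY_BUDGET = 256    # hypothetical cycle budget per inference
MIN_BITS = 10           # hypothetical: narrowest width that kept accuracy acceptable


def estimate(parallel: int, bits: int) -> tuple:
    """Crude stand-in for synthesis reports: (cycles, relative multiplier area)."""
    cycles = -(-MACS // parallel)  # ceiling division: `parallel` MACs per cycle
    area = parallel * bits * bits  # assumes multiplier area grows with bits**2
    return cycles, area


feasible = []
for parallel, bits in product([1, 2, 4, 8, 16, 32], [8, 10, 16, 32]):
    cycles, area = estimate(parallel, bits)
    if cycles <= LATENCY_BUDGET and bits >= MIN_BITS:
        feasible.append((area, cycles, parallel, bits))

feasible.sort()  # smallest area first
for area, cycles, parallel, bits in feasible[:3]:
    print(f"parallel={parallel:2d} bits={bits:2d} cycles={cycles:4d} area={area}")
```

Ranking by area under a latency constraint is only one policy; the same loop could instead minimize latency under an area budget, or keep every non-dominated configuration for the engineer to choose from.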

Furthermore, tools that bridge AI frameworks and HLS let engineers carry trained models toward hardware without manually writing hardware code. Open-source projects like hls4ml, which translates neural networks built in Python frameworks such as Keras and PyTorch into HLS code, make it easier than ever for engineers to turn AI models into efficient hardware designs.

The Future of AI in Embedded Systems: Customization is Key

As AI continues to expand across industries, the demand for efficient, high-performance AI accelerators will only grow. While off-the-shelf accelerators such as NPUs and TPUs will suffice for some applications, many embedded systems will require custom hardware solutions to meet the stringent performance, power, and size requirements of real-time, on-device AI processing.

To stay ahead of the curve, engineers must embrace customization, whether through custom AI accelerators, efficient data representations, or high-level synthesis tools. By carefully optimizing every aspect of their designs, from the hardware itself to the way data is processed, engineers can create the next generation of AI-powered embedded systems, enabling smarter and faster devices across a wide range of applications.

Conclusion

Integrating AI into embedded systems is a complex challenge, but with the right combination of hardware acceleration, data optimization, and custom design, engineers can create powerful, efficient solutions. By understanding the trade-offs between offloading computation and on-device processing, embracing custom AI accelerators, and leveraging high-level synthesis, engineers will play a crucial role in shaping the future of AI-driven embedded devices. The key is to strike a balance between performance, power efficiency, and flexibility.