What's in a Voice Recognition Chipset for IoT?

Voice recognition capabilities used to be relegated to cell phones and some high-end computers, but now everything from cars to coffee makers includes voice recognition or voice activation capabilities. Whether you’re developing industrial products that need to detect specific tones in audio samples or you want to shout your air conditioner into overdrive, you’ll need a complete chipset for audio capture and voice recognition.

Voice recognition capabilities used to be defined at the software level, alongside a mixed bag of hardware for signal conditioning and processing. Today’s best-in-class affordable voice recognition chipsets integrate many formerly separate functions into a single IC. If you’re looking for powerful voice recognition chipset components for IoT products, take a look at the options below.

What Makes for Successful Voice Recognition?

The answer involves more than selecting a microphone and ADC with the right bandwidth. Both are important parts of a voice recognition chipset, but going beyond simply recording voice data requires additional processing. After captured audio is converted into a digital signal, several DSP tasks must be performed to provide a meaningful user experience.

If you’ve ever listened to a recording of yourself made with a studio-quality microphone in a typical room, you may have noticed artifacts that need to be removed for accurate voice/speech recognition. A class of audio DSP ICs known as far-field ICs is well suited to removing these artifacts in preparation for speech recognition. These components provide several important capabilities for speech recognition:

- Automatic gain control: Essentially, this listens for anything that can be classified as a human voice. Once a human voice is identified, the processor increases the gain of the captured signal. Some processors go a step further and continuously adjust the gain as more data is captured.

- Beamforming: This requires an array of microphones, which can be used to determine the direction of a sound source by detecting the phase difference (equivalently, the time delay) between the converted audio signals. The channels can then be combined to emphasize sound arriving from that direction. If you’re familiar with phased array antennas, this is simply the audio analogue, i.e., a phased array of microphones.

- Reverb and echo suppression: Echo suppression can also be implemented at the hardware level using a microphone array. Strong echo received by a voice recognition chipset degrades recognition accuracy, and echo becomes more of a problem the farther the device is from the sound source. Algorithms can also be used in single-microphone products to detect delayed copies of the signal and suppress them in the time domain or frequency domain.

- Reference noise filtering: This feature is particularly important in vehicles, where specific sources of background noise are often present, such as road/engine noise, the radio, or a siren in the case of an emergency vehicle. Some controllers include reference noise filtering at the hardware level; alternatively, it can be implemented on an external processor (e.g., an MCU or FPGA).
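The gain control step described above can be sketched as a block-based automatic gain control (AGC) loop. This is a minimal illustration under stated assumptions, not any vendor's algorithm: the helper names `rms` and `agc` are hypothetical, all parameter defaults are made up, and a simple energy threshold (`noise_floor`) stands in for real human-voice detection.

```python
import math


def rms(block):
    """Root-mean-square level of a non-empty block of samples."""
    return math.sqrt(sum(x * x for x in block) / len(block))


def agc(samples, block_size=160, target_rms=0.1, noise_floor=0.01,
        max_gain=20.0, step=0.5):
    """Block-based automatic gain control (hypothetical sketch).

    Samples are floats in [-1.0, 1.0]. The gain is adapted only on blocks
    whose RMS exceeds noise_floor, a crude energy gate standing in for a
    real voice activity detector. All defaults are assumptions.
    """
    gain = 1.0
    out = []
    for start in range(0, len(samples), block_size):
        block = samples[start:start + block_size]
        level = rms(block)
        if level > noise_floor:
            # Move the gain a fraction `step` of the way toward the value
            # that would bring this block to target_rms, capped at max_gain.
            desired = min(target_rms / level, max_gain)
            gain += step * (desired - gain)
        # Apply the gain and hard-clip to the valid sample range.
        out.extend(max(-1.0, min(1.0, x * gain)) for x in block)
    return out
```

Moving the gain only part of the way on each block, rather than jumping straight to the target, keeps the level from changing abruptly; blocks below the gate leave the gain untouched so background hiss is not amplified.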
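The delay detection behind beamforming (and behind delay-based echo suppression) can be illustrated with a two-microphone direction estimate. This sketch assumes a far-field source, a speed of sound of 343 m/s, and hypothetical helper names `best_lag` and `direction_of_arrival`. It finds the lag that maximizes the cross-correlation of the two channels, converts it to a delay τ, and applies θ = arcsin(c·τ/d) for microphone spacing d.

```python
import math

# Assumed speed of sound in air (m/s) at roughly room temperature.
SPEED_OF_SOUND = 343.0


def best_lag(x, y, max_lag):
    """Return the lag (in samples) in [-max_lag, max_lag] that maximizes the
    cross-correlation sum of x[i] * y[i + lag]. A positive result means y
    is a delayed copy of x."""
    n = len(x)
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(x[i] * y[i + lag] for i in range(n) if 0 <= i + lag < len(y))
        if score > best_score:
            best, best_score = lag, score
    return best


def direction_of_arrival(mic_a, mic_b, sample_rate, spacing_m):
    """Estimate the angle (degrees from broadside) of a far-field source
    from two microphones spacing_m apart. Positive angles mean the sound
    reached mic_a first (hypothetical sign convention)."""
    # The physical delay can never exceed spacing / c, so limit the search.
    max_lag = math.ceil(spacing_m / SPEED_OF_SOUND * sample_rate)
    tau = best_lag(mic_a, mic_b, max_lag) / sample_rate
    # Clamp before asin to absorb rounding from the integer-sample lag.
    s = max(-1.0, min(1.0, SPEED_OF_SOUND * tau / spacing_m))
    return math.degrees(math.asin(s))
```

With 10 cm spacing at 48 kHz, one sample of delay corresponds to roughly 4 degrees near broadside. That coarse resolution is why practical designs typically interpolate the correlation peak or work with phase in the frequency domain, then delay and sum the channels to steer toward the estimated direction.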
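Reference noise filtering is commonly built around an adaptive filter: a separate reference input that is correlated with the noise (for example, a tap of the radio signal in a car) is filtered and subtracted from the microphone signal. The sketch below uses a generic normalized LMS (NLMS) canceller; it is a textbook technique named here as an example, not necessarily what any particular controller implements, and the function name `nlms_cancel`, filter length, and step size are hypothetical.

```python
def nlms_cancel(primary, reference, taps=8, mu=0.5, eps=1e-8):
    """Normalized LMS adaptive noise canceller (hypothetical sketch).

    primary:   microphone signal = voice + noise reaching the mic
    reference: signal correlated with the noise (e.g. the radio feed)
    Returns the error signal, which approximates the voice once the
    adaptive filter has learned the path from reference to microphone.
    """
    w = [0.0] * taps      # adaptive FIR coefficients
    buf = [0.0] * taps    # most recent reference samples, newest first
    out = []
    for d, r in zip(primary, reference):
        buf = [r] + buf[:-1]
        y = sum(wi * xi for wi, xi in zip(w, buf))  # estimated noise at the mic
        e = d - y                                   # cleaned output sample
        # Normalize the step by reference power so adaptation speed does
        # not depend on how loud the reference is.
        norm = eps + sum(xi * xi for xi in buf)
        w = [wi + mu * e * xi / norm for wi, xi in zip(w, buf)]
        out.append(e)
    return out
```

Because the filter only learns what is correlated with the reference, speech (which is absent from the reference) passes through while the reference-correlated noise is progressively removed.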

Once the captured voice signal is pre-processed, words can be detected from speech patterns with algorithms implemented in hardware or software. Without getting too deep into the computational side, the goal in speech recognition is to classify a series of acoustic signatures as one of the words in a large dictionary. Simple statistical models, such as a naive Bayes classifier, can provide accurate classification as long as the right signal processing steps are performed first.
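To make the classification step concrete, here is a minimal Gaussian naive Bayes classifier over fixed-length feature vectors. The class name, the two-dimensional features, and the word labels are hypothetical; a real system would extract features (such as MFCCs) from the pre-processed audio and would need far more training data than this toy example.

```python
import math
from collections import defaultdict


class GaussianNaiveBayes:
    """Gaussian naive Bayes (hypothetical sketch): each feature is modeled
    as an independent normal distribution within each class."""

    def fit(self, features, labels, var_floor=1e-6):
        grouped = defaultdict(list)
        for x, y in zip(features, labels):
            grouped[y].append(x)
        total = len(labels)
        self.stats = {}
        for label, rows in grouped.items():
            n = len(rows)
            means = [sum(col) / n for col in zip(*rows)]
            # Floor the variance so a constant feature cannot divide by zero.
            variances = [max(sum((v - m) ** 2 for v in col) / n, var_floor)
                         for col, m in zip(zip(*rows), means)]
            self.stats[label] = (math.log(n / total), means, variances)
        return self

    def predict(self, x):
        def log_posterior(label):
            log_prior, means, variances = self.stats[label]
            ll = log_prior
            for v, m, var in zip(x, means, variances):
                # Log of the normal density for this feature.
                ll += -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
            return ll
        # Pick the label with the highest (unnormalized) log posterior.
        return max(self.stats, key=log_posterior)
```

Usage: `GaussianNaiveBayes().fit(train_vectors, train_words).predict(new_vector)`. Working in log space avoids underflow when many per-feature probabilities are multiplied together.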

Ideal Chipsets for IoT Products

In theory, any DSP IC, or an MCU and an audio codec IC, could be used as part of a voice recognition chipset. The products shown below are just a few options that are geared towards speech recognition applications.

To keep latency low enough for these pre-processing and classification steps, any DSP IC that performs on-chip classification should provide a throughput of at least several MIPS, since the classification steps alone can take hundreds of thousands of calculations. Standard I/Os (e.g., I2C and GPIO) are also useful for interfacing with other components in your system. You may need an external processor to implement classification, limiting your DSP to pre-processing steps only. The components below show what current DSPs are capable of and what to expect from upcoming SoCs.
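The link between operation count, latency, and MIPS can be checked with simple arithmetic. This sketch assumes one instruction per operation and no parallelism, which real DSPs with single-cycle MAC units or SIMD will beat, so treat the result as a rough upper bound; the function names and the workload figures below are hypothetical.

```python
def required_mips(ops_per_decision, latency_s, sample_rate_hz=0, ops_per_sample=0):
    """Rough throughput (millions of instructions per second) needed to
    finish one classification within latency_s while also running
    per-sample pre-processing. Assumes one instruction per operation."""
    classification_ops_per_s = ops_per_decision / latency_s
    preprocessing_ops_per_s = sample_rate_hz * ops_per_sample
    return (classification_ops_per_s + preprocessing_ops_per_s) / 1e6


def instructions_per_sample(mips, sample_rate_hz):
    """Instruction budget available per audio sample at a given MIPS rating."""
    return mips * 1e6 / sample_rate_hz
```

For example, a hypothetical 300,000 operations per decision with a 50 ms latency target works out to 6 MIPS, and adding 200 operations per sample of pre-processing at 16 kHz raises that to 9.2 MIPS. Going the other way, a 30 MIPS part such as the DSPIC30F has 30,000,000 / 16,000 = 1,875 instructions available per sample at 16 kHz.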

Microchip, DSPIC30F

The DSPIC30F family of signal processors from Microchip was released before voice recognition became a staple in new hardware. This series of DSP ICs was intended for studio-grade digital music production, but Microchip has released a speech recognition library that expands the range of applications for these components. Designers can bring this component into some higher-end speech recognition applications, as the series supports up to 24-bit audio capture and provides up to 30 MIPS of processing throughput.

Example application diagram from the [DSPIC30F datasheet]

Texas Instruments, OMAP5910JZZG2

The OMAP5910JZZG2 DSP from Texas Instruments is a highly adaptable DSP for a range of applications, including video acceleration, speech recognition, encryption/decryption, and image/video watermarking. This low-power device integrates a number of functions directly on-chip, including a host interface, 10 GPIOs, and other peripherals. Although this is an older DSP, it is still a powerful option for pre-processing voice signals and is still in production.

Synaptics, CX20921-21Z

The CX20921-21Z SoC from Synaptics typically finds its place in smart home systems. Designers who want to integrate with Microsoft Cortana or Amazon Alexa will have access to an SDK for embedded application development. This component can be used with 2-microphone or 4-microphone arrays. It captures voice at 24-bit resolution with 106 dB dynamic range, and available sample rates range from 8 kHz to 96 kHz per microphone channel.
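The capture figures for a part like the CX20921-21Z translate directly into interface bandwidth and a theoretical resolution limit. The sketch below uses hypothetical helper names and the standard ideal-converter formula SNR = 6.02·N + 1.76 dB for a full-scale sine wave; it says nothing about how Synaptics specifies or measures its 106 dB figure.

```python
def raw_bit_rate(channels, bits_per_sample, sample_rate_hz):
    """Uncompressed PCM bit rate in bits per second."""
    return channels * bits_per_sample * sample_rate_hz


def ideal_dynamic_range_db(bits):
    """Quantization-limited SNR of an ideal N-bit converter for a
    full-scale sine wave: 6.02 * N + 1.76 dB."""
    return 6.02 * bits + 1.76
```

Four microphones at 24 bits and 96 kHz produce 4 × 24 × 96,000 = 9,216,000 bit/s (about 9.2 Mbit/s) of raw audio, while an ideal 24-bit converter would reach about 146 dB. The quoted 106 dB sits well below that ideal, which is typical: analog noise in the microphone and converter path, rather than word length, usually sets the usable dynamic range.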

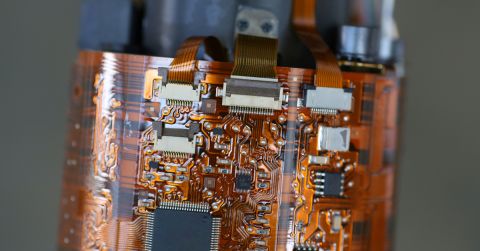

Evaluation board for the CX20921-21Z SoC from Synaptics. From the Synaptics AudioSmart Development Kit.

The IoT revolution shows no sign of slowing, and newer SoCs that integrate capture, conditioning, processing, and system control will hit the market at full scale soon. When you’re looking for the newest and most advanced voice recognition chipset, you can find the components you need on Octopart.
