Behind the Scenes with AI Embedded Development

It’s no secret that artificial intelligence (AI) has become a game changer in software development. One-shot generative AI was helpful, but the introduction of thinking models and agentic workflows has completely disrupted the world of code generation. One of the questions I hear most often from colleagues is how to squeeze as much efficiency out of an AI agent as possible, ideally to the tune of a 5-10x gain.

In this article, we’re going to discuss some simple, yet powerful, techniques that I use throughout my development process when I’m writing code for embedded systems.

AI Embedded Development Background

Perhaps you’ve noticed quite a few posts on social media lately from people claiming to have become overnight successes with AI. A lot of us start to wonder what we’re doing wrong and why we can’t achieve 1000x productivity or generate $300k a day using GenAI to create the “best apps in the world.”

First of all, I think most of those posts are gross exaggerations. They typically go something like this: “I recently started using <insert AI app here> and now I make $1 million a month creating apps that everyone loves!” Obviously, I’m exaggerating a bit, but you get the point. If any of it is remotely true, the authors most likely already have a business or a strong foundation not only in app design but also in marketing and selling apps.

While we all want to become millionaires overnight (like our friends in the social media posts), it’s unlikely AI will do that for us quickly (or at all). What is likely, however, is that if we apply the guidelines and techniques outlined in this article to our development workflow, we can significantly increase the output and quality of our work on the clock.

AI Embedded Development Principles

Let’s go over a few principles I use during the design phase that have significantly improved both my workflow and my end product.

Plan-Only Gate

Before we do any coding with our AI agent, we want to plan together, sparring back and forth until we’ve hashed out a spec. Preface your prompt with something like: “I have the following spec I’d like to implement. I want to first review the plan together, receive feedback from you, and then plan out the implementation phases once we’re good with the spec. DO NOT write any code until I’ve approved the plan.” This helps ensure you’ve covered all your bases on the design, so you don’t get caught off guard when the agent starts implementing something you didn’t ask for.

Natively Compiled Demo Based on HAL

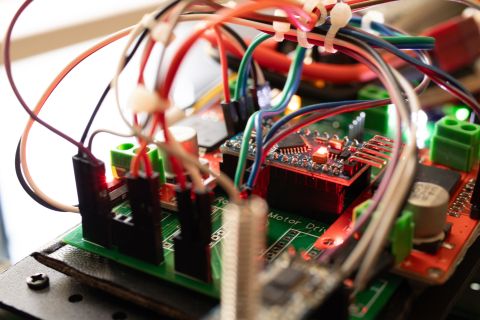

Assuming this project is eventually making its way onto hardware, you’re going to have a very difficult time getting an agentic workflow to write, test, and validate your embedded code without unfettered access to the device (more on that later). Even if the agent does have access to the device, you want to keep the design-test-validate loop as short as possible. Creating a hardware abstraction layer (HAL) that separates the “algorithm” from hardware-level calls is a terrific way to get AI to do most of the grunt work for you.

For example, in Self-Organizing MCUs (featured in “Letting Your Boards Sort Themselves Out”), the majority of the functional code is written completely independently of any hardware device. This lets the LLM write and test code natively on the host operating system it runs on, without needing to know much about how to interact with or debug a piece of hardware.
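
Here’s a minimal sketch of what that separation can look like in C. It is not code from the Self-Organizing MCUs project; `hal_t`, `threshold_step`, and the mock backend are hypothetical names chosen for illustration. The algorithm only touches hardware through a small table of function pointers, so an agent can compile and unit-test it on the host against a scripted mock, while a target build would supply a `hal_t` backed by real ADC and GPIO drivers instead.

```c
/*
 * Minimal HAL sketch (all names hypothetical). The algorithm only reaches
 * hardware through hal_t, so it can be built and tested natively on the
 * host with a mock backend; a target build supplies real drivers instead.
 */
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* The only interface the algorithm uses to touch hardware. */
typedef struct {
    uint16_t (*read_sensor)(void *ctx); /* on target: e.g. an ADC conversion */
    void (*set_led)(void *ctx, int on); /* on target: e.g. a GPIO write */
    void *ctx;                          /* backend-specific state */
} hal_t;

/* Hardware-independent "algorithm": average n samples and turn the LED on
 * if the average exceeds the threshold. Returns the computed average. */
uint16_t threshold_step(const hal_t *hal, size_t n, uint16_t threshold) {
    assert(hal != NULL && n > 0);
    uint32_t sum = 0;
    for (size_t i = 0; i < n; i++) {
        sum += hal->read_sensor(hal->ctx);
    }
    uint16_t avg = (uint16_t)(sum / n);
    hal->set_led(hal->ctx, avg > threshold);
    return avg;
}

/* Native mock backend: replays scripted samples and records the LED state. */
typedef struct {
    const uint16_t *samples; /* must contain at least one sample */
    size_t count;
    size_t next;
    int led_on;
} mock_ctx_t;

static uint16_t mock_read_sensor(void *ctx) {
    mock_ctx_t *m = ctx;
    assert(m->count > 0);
    /* Once the script runs out, keep returning the last sample. */
    size_t i = m->next < m->count ? m->next++ : m->count - 1;
    return m->samples[i];
}

static void mock_set_led(void *ctx, int on) {
    mock_ctx_t *m = ctx;
    m->led_on = on;
}

hal_t make_mock_hal(mock_ctx_t *m) {
    hal_t hal = { .read_sensor = mock_read_sensor, .set_led = mock_set_led, .ctx = m };
    return hal;
}
```

A host-side test can drive `threshold_step` through `make_mock_hal` and check both the returned average and the recorded LED state, all without a board attached. A struct of function pointers is just one way to draw the boundary; compiling different implementations of the same HAL header for host and target works equally well.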

Parallelize Like a PM

Split your tasks into parallel streams so your agents can work even faster. Leverage multiple tabs in IDEs such as Cursor, separate windows for research in ChatGPT or Claude, and background agents in tools such as Codex.

For example, one task could be your algorithm development while two other tasks focus on documentation and tests for other features already implemented. Be the project manager you always wanted to be. Bonus: you get to boss around your “team” as much as you want without them talking back or quitting!

Observability Hooks

While large language models (LLMs) have been trained on the entire internet (and more), their programming prowess is clearly biased toward general-purpose programming rather than embedded systems. As a result, a thinking model’s debugging ability is strongest in high-level programming languages. While it can think its way through an embedded bug (i.e., a combined hardware/software issue), it’s not going to do nearly as well as it would debugging a Python-based web application.

To overcome this challenge, we must give the agent the proper tools and context to debug issues in our code and/or connected hardware. Running MCP (Model Context Protocol) servers that compile, upload, and communicate directly with our devices is an absolute must when debugging issues in real time on hardware targets. This means providing mechanisms to communicate with the board and pull as much telemetry as possible. The ability to peek and poke as many registers as possible, and to report issues back to the agent, should be the baseline when working in tandem with your agent.
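
One way to give the agent register-level visibility is a tiny text command handler on the board that the agent (or an MCP server) can drive over a serial link. The sketch below is an assumption, not a standard protocol: the `peek`/`poke` command syntax, `reg_access_t`, `handle_command`, and the simulated register file are all hypothetical. Register access goes through callbacks so the parser itself can be tested on the host; on target, `read32`/`write32` would dereference `volatile uint32_t *` addresses.

```c
/*
 * Hypothetical peek/poke command handler for register-level telemetry.
 * Register access goes through callbacks so the parser can be tested on
 * the host; on target the callbacks would dereference volatile pointers.
 */
#include <assert.h>
#include <inttypes.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

typedef struct {
    uint32_t (*read32)(uint32_t addr, void *ctx);
    void (*write32)(uint32_t addr, uint32_t value, void *ctx);
    void *ctx;
} reg_access_t;

/* Handles one line such as "peek 0x40000004" or "poke 0x40000004 0x1F".
 * Writes "OK 0x<value>" or "ERR ..." into out and returns 0 or -1. */
int handle_command(const reg_access_t *regs, const char *line,
                   char *out, size_t out_len) {
    assert(regs != NULL && line != NULL && out != NULL);
    char cmd[8];
    uint32_t addr = 0, value = 0;
    /* %x accepts an optional 0x prefix, like strtoul with base 16. */
    int fields = sscanf(line, "%7s %" SCNx32 " %" SCNx32, cmd, &addr, &value);

    if (fields == 2 && strcmp(cmd, "peek") == 0) {
        snprintf(out, out_len, "OK 0x%08" PRIX32, regs->read32(addr, regs->ctx));
        return 0;
    }
    if (fields == 3 && strcmp(cmd, "poke") == 0) {
        regs->write32(addr, value, regs->ctx);
        /* Read back so the agent can confirm the write actually landed. */
        snprintf(out, out_len, "OK 0x%08" PRIX32, regs->read32(addr, regs->ctx));
        return 0;
    }
    snprintf(out, out_len, "ERR bad command");
    return -1;
}

/* Host-side simulated register file: SIM_WORDS 32-bit words at SIM_BASE. */
#define SIM_BASE 0x40000000u
#define SIM_WORDS 16u

typedef struct { uint32_t words[SIM_WORDS]; } sim_regs_t;

static uint32_t sim_read32(uint32_t addr, void *ctx) {
    sim_regs_t *s = ctx;
    uint32_t idx = (addr - SIM_BASE) / 4u; /* wraps to a huge index if below base */
    return idx < SIM_WORDS ? s->words[idx] : 0xDEADBEEFu;
}

static void sim_write32(uint32_t addr, uint32_t value, void *ctx) {
    sim_regs_t *s = ctx;
    uint32_t idx = (addr - SIM_BASE) / 4u;
    if (idx < SIM_WORDS) s->words[idx] = value;
}

reg_access_t make_sim_regs(sim_regs_t *s) {
    reg_access_t r = { .read32 = sim_read32, .write32 = sim_write32, .ctx = s };
    return r;
}
```

Echoing the read-back value after each `poke` gives the agent immediate confirmation that a write took effect. On real hardware you would likely also restrict which addresses are accepted, since touching an unmapped or sensitive peripheral address can fault or hang the part.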

Non-Blocking by Default

When pairing AI workflows with real hardware (MCP or not), it’s critical to add timeouts to every type of operation, from something as simple as a serial read over UART to a complex workflow requiring multiple calls to different pieces of hardware. Without a timeout, an agent can get completely hung up on an operation and spin its wheels forever. You either need to bake timeouts into your prompt (e.g., “Time out after 10 seconds when running the following task if you get no response…”) or keep an eye on the terminal it spawns to make sure the agent isn’t stuck.
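
For serial reads issued from the host, the timeout can also live in the tooling itself rather than relying on the agent to notice a hang. The sketch below assumes a POSIX host and a serial port that has already been opened and configured (that setup, e.g. via termios, is not shown); `read_with_timeout` is a hypothetical helper name. It waits with `poll()` against a monotonic deadline, so it returns 0 on timeout instead of blocking forever, and it retries after `EINTR` without extending the total wait.

```c
/*
 * Sketch: a host-side read that gives up after timeout_ms instead of
 * blocking forever. Works on any POSIX file descriptor, e.g. an already
 * opened and configured serial port. Helper name is hypothetical.
 */
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <poll.h>
#include <stddef.h>
#include <sys/types.h>
#include <time.h>
#include <unistd.h>

/* Milliseconds from a monotonic clock, unaffected by wall-clock changes. */
static long long mono_ms(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (long long)ts.tv_sec * 1000 + ts.tv_nsec / 1000000;
}

/* Returns bytes read (> 0), 0 on timeout, or -1 on error or EOF. */
ssize_t read_with_timeout(int fd, void *buf, size_t len, int timeout_ms) {
    assert(buf != NULL && len > 0 && timeout_ms >= 0);
    long long deadline = mono_ms() + timeout_ms;
    for (;;) {
        long long remaining = deadline - mono_ms();
        if (remaining < 0) remaining = 0;
        struct pollfd pfd = { .fd = fd, .events = POLLIN };
        int rc = poll(&pfd, 1, (int)remaining);
        if (rc < 0) {
            if (errno == EINTR) continue; /* interrupted: retry with time left */
            return -1;
        }
        if (rc == 0) return 0;            /* deadline reached with no data */
        ssize_t n = read(fd, buf, len);
        if (n > 0) return n;
        if (n < 0 && (errno == EINTR || errno == EAGAIN)) continue;
        return -1;                        /* EOF (device gone) or read error */
    }
}
```

A helper script or MCP server that wraps serial access this way can report “no response after N ms” back to the agent, which gives it something concrete to reason about instead of a terminal that never returns.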

Conclusion

As of today, pairing with AI to write embedded code is not the one-shot operation it can be with some other languages and frameworks. Working with hardware is tricky for humans and even more so for large language models. Given the complexity of developing for embedded systems, we discussed a few principles I apply to my AI pairing workflow that have helped me tremendously over time. With these tips, you should be able to accelerate your development and get higher-quality code and better runtime results on your embedded targets.
