CCD vs. CMOS Sensor Comparison: Which is Best for Imaging?

Should you use a CCD or CMOS sensor in this camera? Here’s how you can compare these sensors.

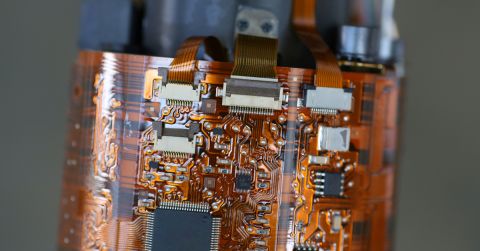

Any design for imaging, computer vision, and photonics applications will need some type of optical assembly and sensor to work properly. Your next optical system will incorporate a broad range of optical components, and imaging sensors are the bridge between the optical and electronic worlds.

Judicious sensor selection means weighing several factors, including response time, form factor, resolution, and application. Choosing between a CCD and a CMOS sensor can be difficult, but it will determine how fast your system can resolve images while avoiding saturation. If you need to work outside the visible range, you’ll need to consider alternative materials to Si for effective imaging. In some applications, it may make more sense to work with a photodiode array. Here’s what you need to know about these different types of sensors and how to choose the right component for your application.

Imaging Sensor and System Requirements

Any imaging system should be designed to meet some particular requirements, and many of them center around the choice of optical sensor. To get started, consider the material you need for your wavelength range, then compare aspects like resolution, response time, and linearity.

Active Material and Detection Range

The active material used in your sensor will determine the sensitive wavelength range, band-tail losses, and temperature sensitivity. You may be working in the infrared, visible, or UV ranges, depending on your application. For camera systems, you’ll want sensitivity throughout the visible range, unless you’re working on a thermal imaging system. For specialized imaging applications, such as fluorescence imaging, you may be working anywhere from the IR to UV range.

Some active materials are still in the research stage, while some are readily available as commercialized components. If you’re comparing CCD vs. CMOS sensor components, the active material is a good place to start when selecting candidate sensors.

- Si: This is the most common material used in imaging sensors. Its indirect bandgap of 1.1 eV (~1100 nm absorption edge) makes it best suited for visible and NIR wavelengths.

- InGaAs: This III-V material provides IR detection out to ~2600 nm. Its low junction capacitance of <1 nF makes InGaAs sensors ideal for applications at SMF wavelengths (1310 and 1550 nm). The detection range is tuned by altering the stoichiometry of In(1-x)Ga(x)As. InGaAs CCD sensors are available on the market from photonics suppliers, and companies like IBM have demonstrated InGaAs compatibility with CMOS processes.

- Ge: This material is less common in CCD cameras and sensors due to its higher cost than Si, and all-Ge and SiGe CMOS sensors are still a hot research topic.
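The link between bandgap and absorption edge quoted for Si above can be checked with the standard photon energy relation, λ (nm) ≈ 1239.84 / E (eV). The following is a minimal sketch, not a material model: the function names are hypothetical, and the result is only the ideal cutoff, ignoring temperature and band-tail effects.

```python
# Photon energy <-> wavelength conversion: lambda [nm] ~= 1239.84 / E [eV].
HC_EV_NM = 1239.84  # h*c expressed in eV*nm (rounded)


def cutoff_wavelength_nm(bandgap_ev: float) -> float:
    """Ideal longest wavelength (nm) absorbed by a material with this bandgap."""
    if bandgap_ev <= 0:
        raise ValueError("bandgap must be positive")
    return HC_EV_NM / bandgap_ev


def bandgap_for_cutoff_ev(cutoff_nm: float) -> float:
    """Bandgap (eV) that corresponds to a given ideal cutoff wavelength (nm)."""
    if cutoff_nm <= 0:
        raise ValueError("cutoff wavelength must be positive")
    return HC_EV_NM / cutoff_nm


# Si, using the 1.1 eV bandgap quoted in the list above.
print(f"Si edge: {cutoff_wavelength_nm(1.1):.0f} nm")
# Bandgap implied by the ~2600 nm InGaAs detection limit quoted above.
print(f"InGaAs at 2600 nm: {bandgap_for_cutoff_ev(2600):.2f} eV")
```

The Si result lands near the ~1100 nm edge quoted above; practical sensitivity falls off well before the ideal cutoff because absorption in an indirect-gap material is weak near the band edge.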

There are other materials available for use as image sensors, although these are normally used in photodiodes and are not heavily commercialized. If you’re working in the visible range, Si is the route to take as you’ll have sensitivity at wavelengths from ~400 nm to ~1050 nm. If you’re working deep in the IR range, you’ll want to use InGaAs. Si CCD and CMOS sensors can be used for UV wavelengths, but only when the sensor has a special surface treatment to prevent ablation.

Color filters are often used on CCD and CMOS sensors to form monochrome images or filter specific wavelengths. It is common to see CCDs and CMOS sensors with a sharp-cut glass filter or thin film to remove IR wavelengths.

Frame Rate, Resolution, and Noise

The frame rate is determined by the way in which data is read out from the detector. The detector is composed of discrete pixels, and data must be read from the pixels sequentially. The method for reading pixels determines the speed at which images or measurements can be acquired. CMOS sensors use an addressing scheme in which each pixel is addressed and read individually. In contrast, CCDs use global exposure and read out each column of pixels with a pair of shift registers and an ADC.
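To see why sequential readout limits frame rate, it helps to run a rough estimate. The sketch below is a simplification under stated assumptions: exposure and readout do not overlap, pixels are split evenly across readout channels, and the 40 MHz pixel rate is an illustrative number, not a value from any datasheet. The function and parameter names are hypothetical.

```python
def max_frame_rate_hz(rows: int, cols: int, pixel_rate_hz: float,
                      exposure_s: float = 0.0, parallel_outputs: int = 1) -> float:
    """Estimate an upper bound on frame rate for sequential pixel readout.

    Assumes exposure and readout do not overlap, and that pixels are split
    evenly across `parallel_outputs` readout channels.
    """
    if min(rows, cols, parallel_outputs) < 1:
        raise ValueError("rows, cols, and parallel_outputs must be >= 1")
    if pixel_rate_hz <= 0 or exposure_s < 0:
        raise ValueError("pixel_rate_hz must be > 0 and exposure_s >= 0")
    readout_s = (rows * cols) / (pixel_rate_hz * parallel_outputs)
    return 1.0 / (exposure_s + readout_s)


# 1920 x 1080 pixels through one output at an assumed 40 MHz pixel rate.
single = max_frame_rate_hz(1920, 1080, 40e6)
# Same array with eight readout channels working in parallel.
multi = max_frame_rate_hz(1920, 1080, 40e6, parallel_outputs=8)
print(f"single output: {single:.1f} fps, eight outputs: {multi:.1f} fps")
```

Under these assumptions, adding readout channels scales the frame rate almost linearly, which is one way to picture why the readout architecture, rather than the pixel count alone, sets the achievable speed.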

Because these sensors use different methods for readout, CCD and CMOS sensor modules require different integrated components. This is where the real comparison starts, as the integrated electronics will determine noise figures, linearity, responsivity, color depth (number of colors that can be reproduced), and limit of detection. The table below shows a brief comparison of the important imaging metrics for CCD and CMOS sensors.

| | CCD | CMOS |
| ---------- | ---------- | ---------- |
| Resolution | Up to 100+ Megapixels | Up to 100+ Megapixels |
| Frame rate | Best for lower frame rates | Best for higher frame rates |
| Noise figure | Lower noise floor → Higher image quality | Higher noise floor → Lower image quality |
| Responsivity and linearity | Lower responsivity, broader linear range | Higher responsivity, lower linear range (saturates early) |
| Limit of detection | Low (more sensitive at low intensity) | High (less sensitive at low intensity) |
| Color depth | Higher (16+ bits is typical for expensive CCDs) | Lower, although becoming comparable to CCDs (12-16 bits is typical) |
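The color depth figures in the table translate into a number of distinguishable levels as 2 raised to the bit depth. The short sketch below assumes the quoted bit depths apply per channel and that a color pixel has three channels; both are illustrative assumptions, and the function names are hypothetical.

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct intensity levels for a given bit depth."""
    if bits < 1:
        raise ValueError("bit depth must be >= 1")
    return 2 ** bits


def total_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Number of representable colors, assuming independent channels."""
    return levels_per_channel(bits_per_channel) ** channels


for bits in (8, 12, 16):
    print(f"{bits}-bit: {levels_per_channel(bits)} levels per channel, "
          f"{total_colors(bits)} colors across 3 channels")
```

Going from 12 to 16 bits multiplies the levels per channel by 16, which is why a few extra bits of ADC resolution matter for applications that need fine intensity gradations.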

What About Photodiodes?

This is a fair question, as photodiode arrays can also be used to gather 1D or 2D intensity measurements. It is important to note that a photodiode is the active element in a CCD or CMOS sensor; the three types of sensors differ in the way data is read out from the device. A photodiode array is constructed with a common cathode configuration, so data is read out from the device in parallel. This makes photodiode arrays faster than CCDs and CMOS sensors. However, the use of two wires per photodiode means that you’ll have a small number of photodiodes in an array; a 100x100 pixel photodiode array contains 10,000 photodiodes and would have 20,000 electrical leads. One can see how this quickly becomes impractical.
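The lead count arithmetic above is easy to reproduce. In this sketch, the `shared_cathode` option is an added assumption for comparison: it models the case where every photodiode shares one cathode lead and only the anodes are wired out individually. The function name is hypothetical.

```python
def lead_count(rows: int, cols: int, shared_cathode: bool = False) -> int:
    """Electrical leads needed to read every photodiode in parallel.

    Two leads per photodiode by default; with `shared_cathode=True`, one
    anode lead per photodiode plus a single common cathode lead.
    """
    if rows < 1 or cols < 1:
        raise ValueError("rows and cols must be >= 1")
    n_diodes = rows * cols
    return n_diodes + 1 if shared_cathode else 2 * n_diodes


print(lead_count(100, 100))                       # two wires per photodiode
print(lead_count(100, 100, shared_cathode=True))  # one shared cathode lead
```

Either way the lead count grows with the number of pixels, so parallel readout stops scaling long before the array reaches camera-class resolution.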

The other option for using photodiodes is to mechanically raster scan the field of view with a laser diode and collect reflected/scattered light. This point measurement approach is used in UAV and automotive lidar systems. You can form a low resolution image in this way, where the frame rate is limited by the scanning rate and averaging time. In this application, CCD and CMOS sensors still win thanks to their higher resolution and similar frame rates.
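The dependence of frame rate on scanning rate and averaging time can be estimated with a simple per-point time budget. This is a rough sketch under stated assumptions: one photodiode measures one point at a time, each point costs a fixed averaging (dwell) time plus an optional scanner settling time, and the numbers are illustrative rather than taken from any lidar product. The names are hypothetical.

```python
def raster_frame_rate_hz(points_x: int, points_y: int,
                         dwell_s: float, settle_s: float = 0.0) -> float:
    """Frame rate of a point-by-point raster scan.

    Each point takes `dwell_s` of averaging plus `settle_s` for the scanner
    to move to the next position; points are measured one at a time.
    """
    if points_x < 1 or points_y < 1:
        raise ValueError("points_x and points_y must be >= 1")
    if dwell_s <= 0 or settle_s < 0:
        raise ValueError("dwell_s must be > 0 and settle_s >= 0")
    frame_time_s = points_x * points_y * (dwell_s + settle_s)
    return 1.0 / frame_time_s


# A 100 x 100 point image with an assumed 10 microseconds of averaging per point.
print(f"{raster_frame_rate_hz(100, 100, 10e-6):.1f} frames per second")
```

Doubling the resolution in both directions quarters the frame rate at a fixed dwell time, which illustrates the trade-off between image resolution and speed in a point-scanned system.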

Example of a raster scanned lidar image for an autonomous vehicle. Note the bus on the right side of the image. Image credit: Baraja.

Finding and vetting CCD vs. CMOS sensor arrays are critical steps in imaging systems design. You can find these components and many more on Octopart.
