Can You Hear Me Now?

Depending on your background and personal experiences, two things may spring to mind when you hear the term “cocktail party problem.” The first is you thinking, “there are never enough cocktails to satisfy everyone’s requirements,” but maybe I’m projecting again.

Alternatively, you may be considering how difficult it is for an artificial intelligence (AI)-enabled voice-controlled assistant like Amazon’s Alexa to follow individual voices when a bunch of people are talking at the same time, such as a gaggle of folks attending a cocktail party.

This is one of those things that humans are particularly good at. As the Wikipedia notes, “The cocktail party effect is the phenomenon of the brain's ability to focus one's auditory attention on a particular stimulus while filtering out a range of other stimuli, as when a partygoer can focus on a single conversation in a noisy room. Listeners have the ability to both segregate different stimuli into different streams, and subsequently decide which streams are most pertinent to them [...] This effect is what allows most people to ‘tune into’ a single voice and ‘tune out’ all others.”

As Bill Bryson says about hearing in The Body: A Guide for Occupants, “Hearing is another seriously underrated miracle […] you can not only hear someone across the room speak your name at a cocktail party but can turn your head and identify the speaker with uncanny accuracy. Your forebears spend eons as prey to endow you with this benefit [...] A pressure wave that moves the eardrum by less than the width of an atom will activate the ossicles and reach the brain as sound [...] From the quietest detectable sound to the loudest is a range of about a million million times of amplitude.”

All of which brings us to those clever chaps and chapesses at the fabless semiconductor company XMOS, which hails from the UK. These guys and gals are at the forefront of far-field voice interface technology. At the core of their offerings (no pun intended) is their xCORE architecture, which boasts multiple deterministic processor cores that can execute multiple tasks simultaneously and independently – all connected via a high-speed switch.

Each processor tile is a conventional RISC processor that can execute up to eight tasks concurrently. Tasks can communicate with each other over channels (that can connect to tasks on the local tile, or to tasks on remote tiles), or using memory (within a tile only). This architecture delivers, in hardware, many of the elements that are usually seen in a real-time operating system (RTOS), including task scheduling, timers, I/O operations, and channel communication. By eliminating sources of timing uncertainty (interrupts, caches, buses, and other shared resources), the xCORE architecture can provide deterministic and predictable performance. Furthermore, tasks can typically respond in nanoseconds to events such as timers or transitions on external inputs. This makes it possible to program xCORE devices to perform hard real-time tasks that would otherwise require dedicated hardware.

In 2017, XMOS acquired Setem Technologies, whose scientists and engineers are the pioneers of Advanced Blind Source Signal Separation technology. When running on an xCORE device, Setem’s patented algorithms can be used to focus on a specific voice or conversation within a crowed audio environment.

This is actually far more powerful than you might initially suppose. Using this technology, it’s possible to take a soundscape that involves multiple people talking – along with “noise” sources like air conditioners, water running out of a tap, radios, and television sets – and disassemble this soundscape into its individual elements. Furthermore, we’re not talking about the ability to home in on a single voice, but to identify and isolate all of the voices and to monitor them and track their owners as they move around the room in real time.

It’s important to note that the folks XMOS don’t make products themselves. What they do is to provide the chips and algorithms that allow their customers to create devices and systems that could change the world. For example, I can easily imagine an intelligence agency placing an Alexa-like microphone array in a room, where this array is powered by a xCORE device running Setem algorithms. Assuming a video camera is also installed in the room, a remote operator could use a mouse to click on one or more people in the room and hear only what the selected people are saying to each other.

I’m a firm believer that the combination of mixed reality (MR) headsets and artificial intelligence (AI) are going to dramatically change the ways in which we interact with the world, our systems, and each other (see also What the FAQ are VR, MR, AR, DR, AV, and HR? and What the FAQ are AI, ANNs, ML, DL, and DNNs?). I can easily imagine having XMOS technology integrated into one’s MR headset, with the AI determining with whom you ate talking, fading down other voices and extraneous sounds, and leaving you to enjoy a crystal-clear conversation in the nosiest of environments. Sad to relate, I can also easily envisage people using this technology to eavesdrop on the conversations of others.

But let us “accentuate the positive and eliminate the negative,” as espoused by the popular song Ac-Cent-Tchu-Ate the Positive from 1944 (the music was written by Harold Arlen; the lyrics by Johnny Mercer). On the one hand, I’m tremendously impressed by the capabilities of my Alexa with its 7-microphone array, and I can only imagine how much better things would be if this little scamp was equipped with XMOS technology.

On the other hand, I can’t help but feel that flaunting seven microphones is a little on the extravagant side. I personally manage to get by surprisingly well with only two ears, so why can’t our voice-enabled assistants do likewise?

Perhaps they will be able to do so in the not-so-distant future. In the summer of 2019, the folks at XMOS introduced their next-generation XVF3510 voice processor that can pluck an individual voice out of a crowded audio landscape using just two microphones.

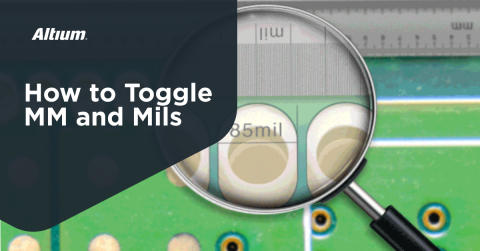

XVF3510-based VocalFusion development kit for Amazon AVS (Source: XMOS)

Your first reaction may be that this looks like a lot of “stuff,” but remember that this is a development kit; the XVF3510 is the small chip in the center of the larger board on the right. The small thin board at the extreme right carries a 2-microphone array. The big board assembly at the left is a Raspberry Pi and HAT. When it was launched, the XVF3510 cost only $0.99 a chip for orders over 1 million units a year (prices started at $1.39 for smaller quantities), but that was six months ago, which is a lifetime in terms of electronic products these days.

I find myself with mixed emotions. I’m excited by a future in which I can stroll around making things happen by simply talking, but I’m also a tad apprehensive at the thought of every device listening to what I say, with the possibility of careless utterances coming back to haunt me down the road. How about you? Are you thrilled or trepidatious as to what the future of a speech-enabled world holds?