How Embedded Machine Learning Applications Will Benefit From 5G and the Cloud

Editorial credit: Anton_Ivanov / Shutterstock.com

When I was studying late at night in college, I often wished I had a cybernetic brain implant. Then I could just download any information I needed and instantly recall it later. No more studying, no more forgetting my girlfriend’s birthday; maybe I could even watch Netflix inside my own head. Sadly, the illustrious electrical engineers who came before me designed Internet of Things (IoT) devices instead. While I’m still waiting on my machine memory, those same devices are getting minds of their own. Machine learning and artificial intelligence (AI) are hot topics, and designers like you are probably looking to implement them in embedded systems. There’s only one problem: the neural networks used for machine learning guzzle too much energy and need too much processing power. The arrival of 5G, combined with cloud computing, may solve that conundrum. Using 5G’s high bandwidth and low latency, cloud computing can give embedded systems artificial intelligence.

Machine Learning in Embedded Systems

Machine learning is not a new concept, but continuing advances in processing power are making it practical. AI will allow gadgets to interact with their environments much more intelligently.

The Synthetic Sensor made by Future Interfaces Group is a great example of how machine learning can improve system operation. This module includes most of the sensors found on “smart” devices, such as ambient temperature, EMI, and noise sensors. It then uses machine learning to understand its surroundings. The Synthetic Sensor can tell which burner on the stove you turned on or identify which appliance is running, so users can know exactly what’s going on in their home while they’re away.
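The Synthetic Sensor’s actual models aren’t described here, but the general idea of recognizing activity from several sensor readings at once can be sketched with a simple nearest-centroid classifier. Treat this as a toy illustration: the normalized readings, the appliance labels, and the function names are all hypothetical, and a real product would use richer features and more capable models.

```python
import math

# Hypothetical labeled training data: each sample is a feature vector of
# normalized (heat, EMI, noise) readings in the range 0..1, tagged with the
# appliance that was running. All values are invented for illustration.
TRAINING = [
    ((0.9, 0.2, 0.1), "stove burner"),
    ((0.8, 0.3, 0.2), "stove burner"),
    ((0.1, 0.9, 0.3), "microwave"),
    ((0.2, 0.8, 0.4), "microwave"),
    ((0.0, 0.1, 0.9), "blender"),
    ((0.1, 0.2, 0.8), "blender"),
]

def train_centroids(samples):
    """Average the feature vectors for each label (nearest-centroid training)."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def classify(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

centroids = train_centroids(TRAINING)
print(classify(centroids, (0.8, 0.2, 0.2)))  # stove burner
```

Nearest-centroid is about the simplest classifier there is; it’s used here only because it makes the “learn from labeled readings, then match new readings” loop easy to see.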

Intelligent sensing offers huge advantages for embedded systems, and some devices already use it. The Nest thermostat learns the temperatures you prefer and tracks your movements. It then adjusts the temperature in your house based on its understanding of your preferences and schedule. Hot when you’re home, cold when you’re gone. This kind of understanding and scheduling could make homes more energy efficient.
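Nest doesn’t publish its algorithm, so don’t read this as how the product works. As a toy illustration of learning a schedule, the sketch below averages the setpoints an occupant chose at each hour of the day and sets the temperature back when nobody is home. The adjustment log, the 16 °C away setpoint, and the 20 °C default are all assumed values.

```python
from collections import defaultdict

# Hypothetical log of (hour_of_day, setpoint_in_celsius) adjustments made by
# the occupant. Toy illustration only, NOT the Nest thermostat's algorithm.
ADJUSTMENTS = [(7, 21.0), (7, 22.0), (18, 22.5), (18, 21.5), (23, 18.0)]
AWAY_SETPOINT_C = 16.0     # assumed energy-saving setpoint when nobody is home
DEFAULT_SETPOINT_C = 20.0  # assumed fallback for hours with no history

def learn_schedule(adjustments):
    """Average the occupant's chosen setpoints for each hour of the day."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for hour, setpoint in adjustments:
        totals[hour] += setpoint
        counts[hour] += 1
    return {hour: totals[hour] / counts[hour] for hour in totals}

def target_temperature(schedule, hour, occupied):
    """Use the learned preference when someone is home; otherwise set back."""
    if not occupied:
        return AWAY_SETPOINT_C
    return schedule.get(hour, DEFAULT_SETPOINT_C)

schedule = learn_schedule(ADJUSTMENTS)
print(target_temperature(schedule, 7, occupied=True))   # 21.5
print(target_temperature(schedule, 7, occupied=False))  # 16.0
```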

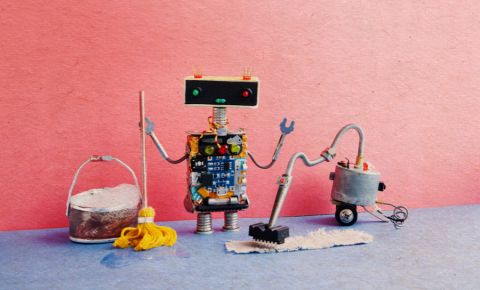

Don’t embed AI systems into your flesh, please.

Barriers to Machine Learning

If machine learning is so great, why haven’t we implemented it everywhere yet? For the same reason I don’t have a bionic brain: it’s too bulky and requires too much energy.

It’s not exactly easy to fit a supercomputer in an embedded system, but that’s what you’ll have to do if you want AI. Machine learning takes a humongous amount of processing power, more than is prudent to put in an embedded system. Even manufacturers of cars with advanced driver assistance systems (ADAS) worry about fitting the processors that intelligent systems require. Developers now use GPUs to speed up machine learning, but GPUs won’t shrink the hardware down to the size you need.

Even when appropriate chips are available, you’ll still have to deal with AI’s power requirements. In 1989, researchers at Carnegie Mellon built a car that drove itself using a neural network. The system was called ALVINN, and its 100 MFLOPS processor was powered by a 5,000 W generator. Today’s chips are far more efficient than that, but modern neural networks also demand far more computation, so power draw remains a serious constraint. Large embedded systems, like an ADAS-enabled car, might have room for lots of batteries; appliances and handhelds, however, would need a battery larger than the device itself.
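To put ALVINN’s numbers in perspective, here’s some back-of-the-envelope arithmetic. It treats the whole 5,000 W as the computing load and assumes a 15 Wh battery, roughly the capacity of a smartphone’s; both are simplifications for illustration.

```python
# ALVINN figures come from the paragraph above; the 15 Wh battery capacity
# is an assumed, roughly smartphone-sized value.
ALVINN_FLOPS = 100e6      # 100 MFLOPS
ALVINN_POWER_W = 5_000.0  # 5,000 W generator
BATTERY_WH = 15.0         # hypothetical handheld battery capacity

def flops_per_watt(flops, watts):
    """Computational efficiency: floating-point operations per second per watt."""
    return flops / watts

def runtime_seconds(battery_wh, load_w):
    """Watt-hours times 3,600 gives joules; divide by watts to get seconds."""
    return battery_wh * 3600 / load_w

print(flops_per_watt(ALVINN_FLOPS, ALVINN_POWER_W))  # 20000.0
print(runtime_seconds(BATTERY_WH, ALVINN_POWER_W))   # 10.8
```

At that draw, the assumed battery would last about 11 seconds, which is why a handheld running 1989-class hardware would need a battery far bigger than itself.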

These are both difficult issues, but we’ll eventually overcome them. In the meantime, a shorter-term solution for bringing AI to embedded systems is the combination of 5G and cloud computing.

Cloud Computing and 5G

The Cloud, what a mysterious thing. I like to picture it as the world of Tron, though hopefully with less conflict. When combined with 5G, cloud computing could be smart enough to help me create my own computer world.

Cloud computing can solve the aforementioned power and processing limitations. Calculations can run at an external location with practically unlimited computing power and a grid connection. People already use the Cloud for distributed computing, so there’s no reason embedded systems shouldn’t do the same. Google, Amazon, and others have already begun offering cloud machine learning services. With your processors off in some faraway server farm, you mainly have to worry about powering the radio. This brings me to 5G.
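Whether offloading actually saves power depends on the task. A common way to frame the decision is to compare the time and energy needed to compute locally against the time and energy needed to transmit the input and wait for the answer. The sketch below is a simplified model: the `Task` and `Device` types, `should_offload`, and every parameter value are hypothetical, and it ignores the energy spent idling while waiting and receiving the result.

```python
from dataclasses import dataclass

@dataclass
class Task:
    cycles: float      # CPU cycles to run the model on the device
    input_bits: float  # sensor data that must be uploaded to offload
    deadline_s: float  # latency budget for getting a result

@dataclass
class Device:
    cpu_hz: float            # local processor clock rate
    joules_per_cycle: float  # local energy cost per CPU cycle
    uplink_bps: float        # radio uplink throughput
    joules_per_bit: float    # radio energy to transmit one bit
    rtt_s: float             # network round-trip time

def should_offload(task: Task, dev: Device, cloud_compute_s: float) -> bool:
    """Return True if sending the task to the cloud is the better choice."""
    local_time = task.cycles / dev.cpu_hz
    local_energy = task.cycles * dev.joules_per_cycle
    remote_time = task.input_bits / dev.uplink_bps + dev.rtt_s + cloud_compute_s
    remote_energy = task.input_bits * dev.joules_per_bit
    if remote_time > task.deadline_s:
        return False  # the cloud can't answer in time, so keep it local
    if local_time > task.deadline_s:
        return True   # only the cloud meets the deadline
    return remote_energy < local_energy  # both are fast enough; pick lower energy

# Hypothetical device with a 200 MHz processor and a 1 Gbps uplink.
device = Device(cpu_hz=200e6, joules_per_cycle=1e-9,
                uplink_bps=1e9, joules_per_bit=1e-8, rtt_s=0.010)

big_model = Task(cycles=2e9, input_bits=8e6, deadline_s=0.1)
tiny_model = Task(cycles=1e6, input_bits=8e6, deadline_s=0.1)
print(should_offload(big_model, device, cloud_compute_s=0.005))   # True
print(should_offload(tiny_model, device, cloud_compute_s=0.005))  # False
```

With these made-up numbers, the large model only meets its deadline in the cloud, while the tiny model is cheaper to run on the device than to upload its input. Faster links make offloading more attractive, but transmitting data still costs energy.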

Machine learning requires a lot of data, data that is currently difficult to transmit wirelessly. Intel estimates that ADAS-enabled cars will need to process 1 GB of data per second. That’s a lot of information to send over a Wi-Fi or 4G connection. It just so happens that 5G will support data rates up to 10 Gbps with latencies under 10 ms. Since 1 GB/s works out to 8 Gbps, that stream fits within 5G’s peak rate, so your device will be able to transfer the required data and receive an interpretation almost instantly. Companies are also working on low-power 5G radios so that your boards can make smarter decisions using less electricity.
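A quick sketch makes the link budget concrete. It uses the figures from the paragraph above (1 GB/s of data, a 10 Gbps 5G peak, and a 10 ms latency bound); the 1 Gbps 4G peak is an assumed comparison value, and decimal units (1 GB = 1,000 MB) are assumed throughout. Keep in mind that peak rates are theoretical and real-world throughput is lower.

```python
# Figures from the text: 1 GB/s of ADAS data, a 10 Gbps 5G peak rate, and a
# 10 ms latency bound. The 1 Gbps 4G peak is an assumed comparison value.
DATA_RATE_GB_PER_S = 1.0
LINKS_GBPS = {"5G (peak)": 10.0, "4G (assumed peak)": 1.0}
LATENCY_S = 0.010

required_gbps = DATA_RATE_GB_PER_S * 8  # convert gigabytes to gigabits

for name, capacity in LINKS_GBPS.items():
    utilization = required_gbps / capacity
    print(f"{name}: {utilization:.0%} of link capacity")

# Data that accumulates while waiting one latency interval for a reply.
buffered_mb = DATA_RATE_GB_PER_S * 1000 * LATENCY_S
print(f"Data generated per 10 ms: {buffered_mb:.0f} MB")
```

Under these assumptions the stream uses 80% of 5G’s peak capacity but would need eight times the assumed 4G peak, and about 10 MB of new data piles up during each 10 ms round trip.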

This is exactly how machine learning with cloud computing would look.

Machine learning is an exciting field that will hugely enhance embedded systems. Cloud computing can address AI’s processing and power requirements, and 5G can handle the data transfer.

Once machine learning becomes viable for embedded systems, you’ll need something like an android to help you with all the design work. Well, I don’t have an android for you, but I have the next best thing. Altium Designer® PCB design software will help you design boards for any kind of embedded system. Its wide range of excellent tools will make you feel like a superhuman PCB designer.

Have more questions about machine learning and embedded systems? Call an expert at Altium.