Components Behind Today’s Breakthrough Smart and AR Glasses

Glasses are emerging as the newest personal device form factor, merging fashion, function, and connectivity into one sleek package. According to IDC, shipments of smart and augmented reality (AR) eyewear are expected to grow 39% in 2025, reaching 14 million units and rising to 43 million in 2029.
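As a quick sanity check on the IDC forecast, the 2025 and 2029 unit figures imply a compound annual growth rate of roughly 32%, which is slower than the 39% expected for 2025 itself. The short Python sketch below does the arithmetic. It uses only the two unit counts quoted above and assumes four annual compounding periods between 2025 and 2029.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# IDC unit forecasts quoted above: 14 million (2025) and 43 million (2029).
rate = cagr(14e6, 43e6, 2029 - 2025)
print(f"Implied 2025-2029 CAGR: {rate:.1%}")
```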

The market is currently driven by smart glasses (also called AI glasses), with 250% growth in 2025, and next-gen AR glasses are right around the corner. From Meta’s camera- and AI-enabled Ray-Bans to XREAL’s lightweight AR display glasses, the category is moving into daily use for millions.

Key Takeaways

- Smart and AR glasses are accelerating toward mainstream adoption, with global revenue forecasts topping $25–30 billion by 2030.

- Advances in displays, sensors, and power systems are driving innovation in camera- and AI-based smart eyewear and enabling the shift to lightweight AR designs with true visual overlays.

- Breakthroughs from suppliers like Sony, Qualcomm, Bosch, and Renesas are making glasses lighter, more comfortable, and more capable, turning eyewear into the next frontier of personal tech.

The Technology Inside the Frame

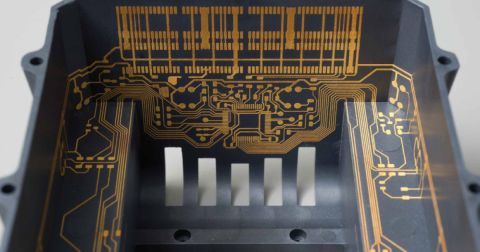

Driving this growth is a cascade of component breakthroughs. Miniature cameras and MEMS microphones, micro-OLED displays, precision IMUs, and energy-dense batteries have all been engineered to fit inside a frame that weighs no more than a pair of everyday, stylish glasses.

Each new product represents a sophisticated balancing act of optics, thermals, connectivity, and style. The result is eyewear that looks familiar yet delivers the kind of computing capabilities once reserved for powerful smartphones. Today’s smart and AR glasses show how far component innovation has come, and it’s easy to imagine how much further it can and will go.

Smart Glasses Go Mainstream

The current generation of smart glasses favors communication, AI assistance, and hands-free convenience over augmented visuals. Instead of projecting graphics, they capture images and video, stream audio, and connect to AI assistants in real time. Inside those slim frames is an impressive collection of parts.

Ray-Ban Meta glasses, for instance, run on Qualcomm’s Snapdragon AR1 Gen 1 platform, a chipset purpose-built for smart glasses and optimized for compact camera processing and low-latency connectivity. Around it sits a range of key components:

- SoC + ISP: the AR1’s dual image signal processors handle 12 MP photos and 6 MP video directly on-device, minimizing heat and lag.

- Audio subsystem: open-ear micro-speakers and an array of Knowles MEMS microphones (such as the SPH1644LM4H1), used for beamforming, deliver clear, hands-free calls.

- Motion sensing: a Bosch BMI270 IMU tracks head movement for stabilization and gesture triggers.

- Battery and fuel gauge: a single-cell Li-ion battery paired with TI’s bq27441 fuel gauge delivers accurate runtime prediction.
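At its simplest, a runtime prediction divides the remaining charge by the present drain. The sketch below is a minimal illustration of that idea, not TI's algorithm (the gauge itself models cell impedance and temperature). It assumes the host has already read two 16-bit little-endian words from the gauge over I2C (the bus access is omitted), treats one as remaining capacity in mAh and the other as a signed average current in mA where negative means discharging, and uses made-up example readings. Check the bq27441 datasheet for the actual command codes, units, and sign convention.

```python
import struct
from typing import Optional


def decode_u16(raw: bytes) -> int:
    """Decode a little-endian unsigned 16-bit gauge word (e.g. remaining capacity, mAh)."""
    return struct.unpack("<H", raw)[0]


def decode_s16(raw: bytes) -> int:
    """Decode a little-endian signed 16-bit gauge word (e.g. average current, mA)."""
    return struct.unpack("<h", raw)[0]


def minutes_to_empty(remaining_mah: int, avg_current_ma: int) -> Optional[float]:
    """Estimate runtime in minutes; None when the cell is idle or charging.

    Assumption: a negative average current means the battery is discharging.
    """
    if avg_current_ma >= 0:
        return None
    return remaining_mah * 60.0 / -avg_current_ma


remaining = decode_u16(b"\x96\x00")  # hypothetical reading: 150 mAh
current = decode_s16(b"\x88\xff")    # hypothetical reading: -120 mA (discharging)
print(minutes_to_empty(remaining, current))
```

With these example readings the estimate is 75 minutes; in practice the average-current window and the gauge's own capacity model matter more than the division itself.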

Designers have also solved key comfort issues: weight distribution, passive cooling, and transparent acoustic channels. These advances have made smart glasses lightweight, fashionable, functional, and practical to wear in public.

Bringing the View to Life: Displays and Optics

While camera- and AI-based smart glasses are entering the mainstream today, the next frontier is visual augmentation: AR glasses that project a true digital image into your field of view. Among consumer devices, the XREAL One Pro AR glasses have become a reference design for what’s currently possible. They use dual Sony 0.55-inch micro-OLED panels running at 1080p/120 Hz, achieving a 57-degree field of view with vivid contrast and brightness up to 700 nits. The company’s flat-prism optical engine efficiently folds light, allowing it to fit within a slim frame.
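Panel resolution and field of view together set perceived sharpness, commonly expressed in pixels per degree (PPD). The rough estimate below assumes 1080p means 1920 × 1080 per eye, that the 57-degree figure is measured diagonally, and that pixels are spread uniformly across the view (real optics add distortion, so treat this as an average).

```python
import math


def pixels_per_degree(h_px: int, v_px: int, diagonal_fov_deg: float) -> float:
    """Average angular resolution, assuming pixels are spread evenly across a diagonal FOV."""
    diagonal_px = math.hypot(h_px, v_px)
    return diagonal_px / diagonal_fov_deg


ppd = pixels_per_degree(1920, 1080, 57.0)
print(f"~{ppd:.1f} pixels per degree")
```

For context, 20/20 visual acuity corresponds to resolving about one arcminute, or roughly 60 PPD, so a result in the high 30s explains why text still benefits from being rendered fairly large.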

Micro-OLED displays remain the workhorse of modern AR glasses. They deliver rich color, excellent contrast, and manageable thermal output, which are all critical for a device that sits millimeters from the user’s eyes. Their downside is brightness; at full intensity, they still struggle against direct sunlight.

To overcome that limit and achieve outdoor functionality, developers are turning to waveguide optics: clear, light-guiding layers that carry projected images through the lens and redirect them into the wearer's view. Two main approaches dominate:

- Reflective waveguides (Lumus, SCHOTT) use tiny mirrors to redirect light with high efficiency, making them bright enough for outdoor use.

- Diffractive waveguides (Dispelix, DigiLens) use nanostructured gratings to couple red, green, and blue light into and out of a single thin sheet, enabling streamlined, lightweight frames.
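Both approaches rely on the same underlying principle: light injected into the glass stays trapped by total internal reflection (TIR) until a mirror or grating couples it out toward the eye. From Snell's law, TIR occurs only for rays that strike the surface at more than the critical angle θc = arcsin(n_outside / n_core), measured from the surface normal. The sketch below assumes a guide surrounded by air and uses a few representative refractive indices (illustrative values, not any vendor's glass). It shows why higher-index glass helps: a smaller critical angle lets a wider range of ray angles, and therefore a wider field of view, propagate inside the guide.

```python
import math


def critical_angle_deg(n_core: float, n_outside: float = 1.0) -> float:
    """Smallest internal incidence angle (from the surface normal) that is totally internally reflected."""
    if n_core <= n_outside:
        raise ValueError("TIR requires the guide to be optically denser than its surroundings")
    return math.degrees(math.asin(n_outside / n_core))


for n in (1.5, 1.7, 2.0):
    print(f"n = {n}: critical angle = {critical_angle_deg(n):.1f} deg")
```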

Both have trade-offs. Reflective types offer brightness and low “eye-glow” but add a few millimeters of thickness; diffractive types are thinner but can lose color uniformity and efficiency. Meanwhile, micro-LED microdisplays – like JBD’s MicroLED AMμLED™ 0.13 Series MIPI microdisplay – promise sunlight-readable AR once production scales in 2026. This microdisplay delivers an extraordinary 6,350 pixels per inch (PPI), positioning it among the world’s smallest and brightest microdisplays.
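Pixel density translates directly into pixel pitch, which makes the JBD figure easier to picture: at 6,350 PPI each pixel sits on a 4 µm grid. The sketch below also compares it with a 0.55-inch 1080p micro-OLED like those in the XREAL One Pro, assuming square pixels and that 0.55 inches is the active-area diagonal.

```python
import math

UM_PER_INCH = 25_400.0


def pixel_pitch_um(ppi: float) -> float:
    """Center-to-center pixel spacing in micrometres for a given pixel density."""
    return UM_PER_INCH / ppi


def ppi_from_diagonal(h_px: int, v_px: int, diagonal_in: float) -> float:
    """Pixel density of a panel, assuming square pixels and the stated active-area diagonal."""
    return math.hypot(h_px, v_px) / diagonal_in


jbd_pitch = pixel_pitch_um(6350)                # JBD figure quoted above
oled_ppi = ppi_from_diagonal(1920, 1080, 0.55)  # 0.55-inch 1080p micro-OLED (assumed active diagonal)
print(f"6,350 PPI micro-LED: {jbd_pitch:.2f} um pitch")
print(f"0.55-inch 1080p micro-OLED: ~{oled_ppi:.0f} PPI, {pixel_pitch_um(oled_ppi):.2f} um pitch")
```

Under these assumptions the micro-OLED lands near 4,000 PPI (about 6.3 µm pitch), so the micro-LED part packs roughly 1.6 times as many pixels per linear inch.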

Compute and Sensing: The Brain and the Eyes

As displays improve, the compute backbone must keep up. Many high-end AR systems now rely on Qualcomm’s XR2 Gen 2 and XR2+ Gen 2 chipsets, which are designed to handle up to ten concurrent cameras and real-time spatial mapping.

Around these processors is a growing constellation of sensors:

- IMUs such as the ST ISM330IS manage motion tracking and stabilization.

- Depth sensors such as Sony’s IMX560 SPAD ToF sensor, paired with ams-OSRAM VCSEL illumination, map the surroundings.

- Cameras and image sensor modules such as the 5 MP OmniVision OV716 CIS handle world-facing capture, eye tracking, and gesture recognition.
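SPAD-based depth sensors typically work by direct time of flight (dToF): a VCSEL pulse travels to the scene and back, and the single-photon detectors timestamp its return. The distance is d = c·Δt/2. The sketch below assumes an idealized single return (no ambient light, multipath, or histogramming) and shows why picosecond-scale timing is needed for centimetre-level depth.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def dtof_distance_m(round_trip_s: float) -> float:
    """Distance to a target from a direct time-of-flight round-trip time."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def timing_for_resolution_s(depth_resolution_m: float) -> float:
    """Round-trip timing resolution needed to separate depths `depth_resolution_m` apart."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT_M_PER_S


print(f"10 ns round trip -> {dtof_distance_m(10e-9):.3f} m")
print(f"1 cm depth resolution needs ~{timing_for_resolution_s(0.01) * 1e12:.1f} ps timing")
```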

A single pair of AR glasses may now integrate more than eight sensors, all feeding a synchronized data stream that must be fused within milliseconds. Latency, not raw processing speed, determines how natural the experience feels. That challenge has pushed SoC vendors to integrate dedicated sensor hubs and AI cores, blurring the line between computation and sensing/perception.
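IMU fusion is a small, concrete instance of this problem. A gyroscope responds instantly but drifts when its rate is integrated; an accelerometer gives an absolute tilt reference but is noisy during motion. The complementary filter below is a classic, lightweight way to blend the two, shown here for head pitch only. It is an illustrative sketch, not the fusion algorithm of any particular SoC or sensor hub; the axis and sign convention, the 200 Hz sample rate, the gyro bias, and the α = 0.98 weight are all assumptions.

```python
import math
from typing import Iterable, List, Tuple


def complementary_pitch(samples: Iterable[Tuple[float, float, float, float]],
                        dt: float, alpha: float = 0.98) -> List[float]:
    """Fuse gyro rate and accelerometer tilt into a pitch estimate in radians.

    samples: (gyro_pitch_rate_rad_s, accel_x, accel_y, accel_z) tuples.
    alpha: weight on the integrated gyro (short term); 1 - alpha trusts the accelerometer (long term).
    """
    pitch = 0.0
    estimates = []
    for gyro_rate, ax, ay, az in samples:
        accel_pitch = math.atan2(-ax, math.hypot(ay, az))  # tilt implied by gravity alone
        pitch = alpha * (pitch + gyro_rate * dt) + (1 - alpha) * accel_pitch
        estimates.append(pitch)
    return estimates


dt = 0.005          # 200 Hz IMU rate (assumption)
true_pitch = 0.2    # head tilted about 11.5 degrees and held still
gyro_bias = 0.01    # rad/s of uncorrected gyro bias (assumption)
g = 9.81
still = [(gyro_bias, -g * math.sin(true_pitch), 0.0, g * math.cos(true_pitch))] * 1000
fused = complementary_pitch(still, dt)
gyro_only = gyro_bias * dt * len(still)  # pure integration: drifts from zero, never sees the tilt
print(f"fused: {fused[-1]:.3f} rad, gyro-only: {gyro_only:.3f} rad")
```

The fused estimate settles close to the true 0.2 rad tilt, with a small offset from the bias, while gyro-only integration just accumulates drift. Production head tracking usually uses quaternion-based filters over all three axes, but the trade-off between responsiveness and drift is the same.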

Power, Thermals, and Connectivity

No matter how capable the optics or processors, power management still dictates how long these devices can run and how comfortable they feel. Most designs use a single-cell (1S) Li-ion configuration, with battery capacity split between the two temple arms and joined by a flexible PCB ribbon.

To keep heat from concentrating at hot spots, engineers rely on Panasonic PGS graphite sheets and GraphiteTIM pads (like EYGS182307), which conduct heat laterally through the frame rather than letting it build up near the temple, where the frame rests against the skin. The difference between 38°C and 43°C at skin contact is the difference between wearable and unwearable.
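To see why a sheet only tens of micrometres thick makes a difference, Fourier's law for one-dimensional conduction gives a strip's thermal resistance as R = L / (k·A), and the heat it carries across a temperature difference ΔT as ΔT / R. The sketch compares a thin graphite sheet with a plastic frame wall of the same width. The dimensions and conductivities (about 1,600 W/m·K in-plane for a 25 µm graphite sheet, about 0.2 W/m·K for a polycarbonate-like plastic) are illustrative assumptions, not values for the EYGS182307 or any specific frame, and real spreading is two-dimensional with convection at the surface.

```python
def conduction_resistance_k_per_w(length_m: float, width_m: float,
                                  thickness_m: float, k_w_per_mk: float) -> float:
    """1-D thermal resistance R = L / (k * A) of a strip conducting heat along its length."""
    return length_m / (k_w_per_mk * width_m * thickness_m)


# Same 50 mm x 10 mm footprint; only the material and thickness differ (assumed values).
graphite = conduction_resistance_k_per_w(0.050, 0.010, 25e-6, 1600.0)
plastic = conduction_resistance_k_per_w(0.050, 0.010, 1e-3, 0.2)
delta_t = 5.0  # K, the 38 degC vs 43 degC gap quoted above

print(f"graphite sheet: {graphite:.0f} K/W, carries {delta_t / graphite * 1000:.1f} mW")
print(f"plastic wall:   {plastic:.0f} K/W, carries {delta_t / plastic * 1000:.2f} mW")
```

Under these assumptions the graphite sheet, though 40 times thinner, conducts about 200 times more heat along the frame than the plastic wall.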

Connectivity modules add their own complexity. Murata Type 2FY and 2EA modules combine Wi-Fi 6/7 and Bluetooth LE in compact, shielded packages. Companion controllers, such as the Renesas DA1470x BLE SoCs, manage user inputs, voice activation, and power states without waking the main processor. Even antenna placement is a careful dance, sharing space with speakers, microphones, and camera apertures in just a few millimeters of plastic.
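The companion-controller pattern is essentially an event filter: a small, always-on core handles cheap events itself and powers up the application processor only when real work arrives. The Python sketch below models that idea as a tiny state machine. The event names, the wake policy, and the class are hypothetical; they do not represent the DA1470x SDK or any vendor's firmware.

```python
from enum import Enum, auto
from typing import List


class Event(Enum):
    """Hypothetical input events seen by an always-on companion controller."""
    TOUCH_TAP = auto()           # tap on the temple touchpad
    WAKE_WORD = auto()           # low-power keyword spotter fired
    PHONE_NOTIFICATION = auto()  # BLE notification from the paired phone
    BATTERY_LOW = auto()         # fuel gauge crossed a threshold


class CompanionController:
    """Minimal model: handle cheap events locally, wake the main SoC only when needed."""

    # Events that need the application processor (assumed policy, not a vendor default).
    WAKES_MAIN = {Event.TOUCH_TAP, Event.WAKE_WORD, Event.PHONE_NOTIFICATION}

    def __init__(self) -> None:
        self.main_awake = False
        self.log: List[str] = []

    def handle(self, event: Event) -> None:
        if event in self.WAKES_MAIN:
            if not self.main_awake:
                self.main_awake = True
                self.log.append("wake main SoC")
            self.log.append(f"forward {event.name}")
        elif event is Event.BATTERY_LOW:
            # Handled entirely on the low-power core; the main SoC's state is untouched.
            self.log.append("lower LED brightness")

    def idle_timeout(self) -> None:
        """Called when no work remains; puts the main SoC back to sleep."""
        if self.main_awake:
            self.main_awake = False
            self.log.append("sleep main SoC")


cc = CompanionController()
for ev in (Event.BATTERY_LOW, Event.WAKE_WORD, Event.TOUCH_TAP):
    cc.handle(ev)
cc.idle_timeout()
print(cc.log)
```

The battery event never wakes the main processor, and the two user events share a single wake-up, which is where this pattern saves power.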

The Next Wave of Everyday AR

The line between smart glasses and AR glasses is starting to blur. SCHOTT’s 2025 production ramp of Geometric Reflective Waveguides and the recent release of Meta’s display-enabled Ray-Bans both signal that consumer AR is nearly ready for prime time. The earliest models will emphasize glanceable data, such as captions, navigation, and notifications, rather than full video or 3D overlays, keeping power budgets realistic.
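The power argument for glanceable content is mostly duty-cycle arithmetic: average power is the active draw weighted by the fraction of time the display is lit, plus the baseline draw for the rest. The sketch below compares always-on video with a display lit 5% of the time. Every number in it (battery energy, active and baseline draw) is hypothetical and chosen only to show the shape of the trade-off.

```python
def average_power_mw(active_mw: float, baseline_mw: float, duty_cycle: float) -> float:
    """Time-weighted power when a subsystem is active for `duty_cycle` (0..1) of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_mw * duty_cycle + baseline_mw * (1.0 - duty_cycle)


def runtime_hours(battery_mwh: float, avg_power_mw: float) -> float:
    """Hours of operation for a given usable battery energy and average draw."""
    return battery_mwh / avg_power_mw


BATTERY_MWH = 600.0  # hypothetical usable battery energy
ACTIVE_MW = 500.0    # hypothetical draw with display and rendering on
BASELINE_MW = 50.0   # hypothetical draw with display off (sensors and radios idling)

for label, duty in (("always-on video", 1.0), ("glanceable, lit 5% of the time", 0.05)):
    p = average_power_mw(ACTIVE_MW, BASELINE_MW, duty)
    print(f"{label}: {p:.1f} mW average, ~{runtime_hours(BATTERY_MWH, p):.1f} h")
```

With these placeholder values, the glanceable mode runs roughly seven times longer, because the baseline draw, not the display, dominates the average.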

As optical efficiency improves and battery chemistry advances, glasses will offer longer wear time and better outdoor readability without sacrificing design. What’s striking is how incremental these breakthroughs are: thinner graphite spreaders, brighter microdisplays, and slightly smarter PMICs.

For hardware designers, this convergence means more cross-disciplinary work: optics engineers collaborating with PCB layout teams, firmware developers tuning power profiles for comfort, sourcing managers tracking waveguide suppliers like they once tracked GPUs.

Notes from the Field: Wearing the Future

After months of regular use, these devices have become part of my daily routine. I wear my Meta Ray-Ban AI glasses most days, walking around town with an audiobook or music playing through open-ear speakers. No earbuds stuck in my ears or visible to others, no disconnection from the world around me. When a WhatsApp alert pings, the glasses whisper the message into my ear, hands-free, while I stay focused on the street ahead or the shopkeeper I'm chatting with. When I have a question, I ask quietly, as if talking to myself or an AI friend in my head, and I get an answer instantly. It feels like the future.

The glasses look like stylish everyday frames, so people don't notice I'm interacting with tech, and it feels like I'm operating under the radar.

On long flights, I switch to my XREAL One Pro AR glasses. Plugged into my phone or laptop via USB-C, they display a large-screen micro-OLED view right in front of me, so instead of feeling like I’m in a cramped airplane cabin, I feel like I’m in a spacious private cinema. Coupled with noise-canceling headphones, this makes a long economy flight much more bearable.

These personal experiences underscore the central technological achievement here: the hardware has finally become small, cool, and efficient enough to disappear into everyday frames, turning what once required cumbersome headsets into something as simple as putting on glasses.

A New Form Factor That Feels Inevitable

Smart and AR glasses mark the next phase of personal computing: ambient, hands-free, and seamlessly integrated into daily life. Instead of pulling out a device to interact with information, we're living alongside it: asking questions aloud, receiving answers in our ear, seeing the world augmented in real time. As components get smaller, smarter, and more efficient, glasses are proving that the most powerful technology is the kind that is present when needed and invisible when not.

Whether you’re building reliable power electronics or advanced digital systems for the next wave of smart and AR devices, Altium Develop brings every discipline together in one collaborative environment, free from silos and limits. Experience Altium Develop today to see how a unified platform helps engineers, designers, and innovators co-create without constraints.