Optical PHY PCB Layout for 100 Gigabit and Faster Ethernet

When you're operating in a data center environment, everything eventually gets to fiber for long-reach, high-data-rate transfers between servers and networking equipment. Optical transceiver modules and their input data lines operate at very high signal bandwidths, which create major challenges for high-speed designers in layout, routing, and signal integrity. These systems have progressed to 100G per lane, with aggregate data rates reaching 800G or 1.6T over multiple lanes.

Switches and optical PHYs will extend to 224G per lane with PAM-4 signaling, bringing the required channel bandwidth to 56 GHz per lane. These bandwidths push the copper interconnects that carry data to the PHY and to the optical module to their limits. In this article, I'll go over the important guidelines for working with an optical PHY of the kind found in a modern network switch, including the layout topology and how to handle power in these components.
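The per-lane bandwidth figures follow from the number of bits carried per symbol: NRZ carries 1 bit per symbol, PAM-4 carries 2, and the Nyquist frequency is half the symbol rate. A minimal Python sketch of that arithmetic is below. It assumes nominal lane rates and ignores line-coding and FEC overhead, so real line rates (and Nyquist frequencies) differ slightly; the function names are illustrative only.

```python
import math


def nyquist_ghz(bit_rate_gbps: float, levels: int) -> float:
    """Nyquist frequency (GHz) of a lane using `levels`-level signaling.

    NRZ (2 levels) carries log2(2) = 1 bit/symbol, PAM-4 carries log2(4) = 2 bits/symbol.
    Nyquist = symbol rate / 2. Nominal rates only; coding/FEC overhead is ignored.
    """
    bits_per_symbol = math.log2(levels)
    baud_gbd = bit_rate_gbps / bits_per_symbol
    return baud_gbd / 2.0


def max_bit_rate_gbps(nyquist_limit_ghz: float, levels: int) -> float:
    """Inverse: highest nominal bit rate whose Nyquist frequency fits under a limit."""
    return 2.0 * nyquist_limit_ghz * math.log2(levels)


if __name__ == "__main__":
    for rate, levels, name in [(56, 2, "56G NRZ"), (112, 4, "112G PAM-4"), (224, 4, "224G PAM-4")]:
        print(f"{name}: {nyquist_ghz(rate, levels):.1f} GHz Nyquist")
    print(f"70 GHz limit, PAM-4: {max_bit_rate_gbps(70, 4):.0f} Gbps")
```

Running it reproduces the 28 GHz and 56 GHz figures used in this article, and the inverse function shows why a channel that stays usable to 70 GHz could, in principle, carry 280 Gbps PAM-4.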

Challenges in Optical PHY Layout and Routing

The graphic below shows the high-level topology of a multi-lane Ethernet interface found in networking equipment. It illustrates the three fundamental parts of an Ethernet interface: the MAC layer embedded in a host processor, the PHY, and the module that connects to the physical transmission medium (in this case, fiber).

The difference between a copper-based interface and a fiber-based interface lies at the module and between the MAC/PHY layers. The module plugs into some type of SFP connector and essentially converts between copper and fiber. The modulation applied here limits the required channel bandwidth to 28 GHz or 56 GHz per lane, depending on the modulation format and data rate. Routing between the host/controller, the PHY, and the optical transceiver module is done with differential pairs.

The topology above applies across a range of data rates, from long-distance transfer at 1 Gbps in multiple lanes up to 800G Ethernet, which relies on multiple 100 Gbps lanes to a fiber transceiver. Currently, in 2023, the industry is moving to 224 Gbps signaling over copper with 56 GHz bandwidths for multiple applications, including the links between optical modules and high-data-rate sources. This pushes interconnect design to mmWave frequencies and also creates challenges for power integrity.

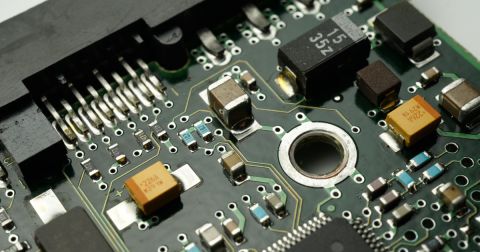

PHY BGA Package and Footprint

PHY components used for interfacing with optical links are available as high-pin-count BGAs with a typical pitch of around 0.8 mm. Components that integrate multiple lanes in a single package have anywhere from 500 to 1000 pins, many of which are VSS (ground). Examine the placement of the TX and RX pins in the ballout first, as this will drive the routing strategy into the component.

The image below shows a portion of the ballout on one of these components, chosen to illustrate where the Rx/Tx pairs come into the package.

The example ballout shown above is for a 16-lane PHY running at up to 56G (NRZ or PAM-4; only lanes 12-15 are shown). There are a few important points to notice in this package:

- Most of the pins are VSS (ground); this is common in high-ball-count switch controllers and PHYs for networking equipment

- The P and N sides of each lane are not next to each other on the outside row/column (red boxes)

- Some portions of the interface are in the interior of the package (blue boxes)

- Each P and N pair is separated from other pairs by the ground vias
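One way to make that first review of the ballout systematic is to tabulate where each pair's balls sit. The sketch below uses a small, hypothetical 6×6 ballout (not taken from any real PHY datasheet) with an assumed `LANE_P`/`LANE_N` naming scheme. It reports the fraction of VSS balls, whether each pair's P and N balls are adjacent, and whether the pair falls within the outer two rings (reachable on the top layer) or needs a via down to a stripline layer.

```python
from collections import defaultdict

# Hypothetical 6x6 ballout (NOT from a real datasheet); signal names follow an assumed LANE_P / LANE_N scheme.
BALLOUT = [
    ["VSS", "TX12_P", "VSS", "VSS", "RX12_P", "VSS"],
    ["VSS", "VSS", "TX12_N", "VSS", "VSS", "RX12_N"],
    ["VSS", "VSS", "TX13_P", "TX13_N", "VSS", "VSS"],
    ["VSS", "VSS", "VSS", "VSS", "VSS", "VSS"],
    ["VSS", "VSS", "VSS", "VSS", "VSS", "VSS"],
    ["VSS", "VSS", "VSS", "VSS", "VSS", "VSS"],
]


def ring_depth(r: int, c: int, nrows: int, ncols: int) -> int:
    """0 = outermost ring of balls, 1 = second ring, and so on."""
    return min(r, c, nrows - 1 - r, ncols - 1 - c)


def analyze(ballout, outer_rings: int = 2):
    """Return (VSS fraction, per-lane report of P/N adjacency and escape strategy)."""
    nrows, ncols = len(ballout), len(ballout[0])
    vss = sum(name == "VSS" for row in ballout for name in row)
    pairs = defaultdict(dict)  # e.g. "TX12" -> {"P": (row, col), "N": (row, col)}
    for r, row in enumerate(ballout):
        for c, name in enumerate(row):
            if name.endswith(("_P", "_N")):
                pairs[name[:-2]][name[-1]] = (r, c)

    report = {}
    for lane, balls in sorted(pairs.items()):
        (pr, pc), (nr, nc) = balls["P"], balls["N"]
        adjacent = abs(pr - nr) + abs(pc - nc) == 1  # orthogonal neighbours
        deepest = max(ring_depth(pr, pc, nrows, ncols), ring_depth(nr, nc, nrows, ncols))
        report[lane] = {
            "adjacent": adjacent,
            "escape": "top layer" if deepest < outer_rings else "via to stripline",
        }
    return vss / (nrows * ncols), report


if __name__ == "__main__":
    vss_fraction, lanes = analyze(BALLOUT)
    print(f"VSS fraction: {vss_fraction:.0%}")
    for lane, info in lanes.items():
        print(lane, info)
```

On a real part, the same tabulation would be fed from the vendor's ballout file rather than a hand-typed grid.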

How should you create a fanout strategy to route into these components? This is one of the most difficult parts of designing at these data rates, because the fanout and escape routing are where most of the signal integrity problems arise. To start, think about the stackup needed to support these designs and select layer thicknesses that aid fanout.

PCB Laminate Materials and Layer Thickness

The laminate materials used in these systems have predominantly been Megtron 7 or newer advanced laminates such as Tachyon 100G from Isola. These laminates offer Dk between 3 and 3.35 and low Df from 0.0012 to 0.0017, which is sufficient to enable the stripline routing required to reach the inner balls on the component packages. We generally avoid unreinforced Rogers (PTFE) materials because of the "wet noodle" problem: these unreinforced laminates (when uncured) are difficult to handle during production. When the layers are thin, the laminate becomes more pliable, and there could be layer-to-layer misregistration.
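To see why those Df values matter at these frequencies, a common rule-of-thumb approximation estimates dielectric loss as roughly 2.3 × f[GHz] × Df × √Dk dB per inch. The sketch below applies it at the 56 GHz Nyquist frequency of a 224G PAM-4 lane for the Dk/Df range quoted above. The "generic FR-4" values (Dk 4.0, Df 0.02) are an assumption included only for comparison, and conductor loss (including copper roughness) is not modeled.

```python
import math


def dielectric_loss_db_per_inch(f_ghz: float, dk: float, df: float) -> float:
    """Rule-of-thumb dielectric loss: ~2.3 * f[GHz] * Df * sqrt(Dk) dB/inch.

    Dielectric loss only; conductor/roughness loss is not included.
    """
    return 2.3 * f_ghz * df * math.sqrt(dk)


NYQUIST_224G_PAM4_GHZ = 56.0

MATERIALS = {
    "low-loss, best case (Dk 3.0, Df 0.0012)": (3.0, 0.0012),
    "low-loss, worst case (Dk 3.35, Df 0.0017)": (3.35, 0.0017),
    "generic FR-4 (assumed Dk 4.0, Df 0.02)": (4.0, 0.02),
}

if __name__ == "__main__":
    for label, (dk, df) in MATERIALS.items():
        loss = dielectric_loss_db_per_inch(NYQUIST_224G_PAM4_GHZ, dk, df)
        print(f"{label}: {loss:.2f} dB/inch at {NYQUIST_224G_PAM4_GHZ:.0f} GHz")
```

With these inputs, the estimate comes out at roughly 0.27 to 0.40 dB per inch for the low-loss laminates versus several dB per inch for the assumed FR-4 values.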

Go back and look at the BGA ballout above; pitch values below 1 mm and the high pin count can demand an HDI stackup with thin buildup layers, typically a Type III stackup. For example, in designs I have worked on, we have used the following stackup parameters to support 100G lanes:

- 3 to 6 mil layer thickness (depending on stripline vs. microstrip routing)

- 4 to 6 mil holes for blind vias

Therefore, we prefer thin layers of low-loss, spread-glass-reinforced materials to support routing. Although these PHY packages have high pin counts, the layer count does not need to be extremely high because most of the pins are VSS. However, you will have to choose outer-layer thicknesses and Dk values that support routing into the inner balls.
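The layer thicknesses and hole sizes listed above are tied together by the microvia aspect ratio (dielectric depth divided by hole diameter). The sketch below checks combinations of the listed values against an assumed maximum aspect ratio of 1:1; that limit is a placeholder, so confirm the actual value with your fabricator before relying on it.

```python
# Assumption: maximum microvia aspect ratio (depth / diameter) of 1:1.
# Confirm the real limit with your fabricator.
MAX_ASPECT_RATIO = 1.0


def microvia_aspect_ratio(dielectric_mil: float, hole_mil: float) -> float:
    """Depth of a single-layer microvia divided by its drilled diameter."""
    return dielectric_mil / hole_mil


def check_stackup(dielectrics_mil, holes_mil, limit: float = MAX_ASPECT_RATIO):
    """Return (thickness, hole, aspect ratio, within limit) for every combination."""
    results = []
    for t in dielectrics_mil:
        for d in holes_mil:
            ar = microvia_aspect_ratio(t, d)
            results.append((t, d, ar, ar <= limit))
    return results


if __name__ == "__main__":
    for t, d, ar, ok in check_stackup([3, 4, 5, 6], [4, 5, 6]):
        print(f"{t} mil layer, {d} mil hole: AR = {ar:.2f} -> {'OK' if ok else 'too deep'}")
```

The output makes the pairing visible: the thinnest layers work with any of the listed holes, while a 6 mil layer needs the larger holes to stay inside the assumed limit.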

Example: Routing from the PHY BGA

Just how optimized is this ballout for 100G data rates? As the simulation data below shows, the 0.8 mm ball pitch is already well optimized for 100G routing with blind vias and appropriate spacing to ground.

We have two options for routing into the PHY shown above:

- Route both traces directly into the P and N balls on the outer 2 rows, and route the inner rows as differential striplines

- Route all traces as differential striplines, and route up to the package through blind vias

Option #1 will require a stackup with 2 extra layers compared to Option #2. Based on past projects with these modules, I recommend Option #2, with a ground fill on the top layer that connects all of the ground pins together. You can then stack 2x microvias down to L3 to reach the next ground plane. This ensures a clear return path for those layer transitions and stitches together the planes on L1 and L3.

Suppose we have a PHY in a 0.8 mm pitch BGA package. An example stackup that could support this component is shown below; the red boxes outline two layers that could be used to route into the outer and inner rows of the PHY.

If we place the escape routing on L2, we could use 4 mil microvia-in-pad. Applying an appropriate annular ring leaves approximately 20 mil for routing between the inner-layer lands on the microvias. That is plenty of room to route a differential stripline pair between microvias on L2.
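The ~20 mil figure can be checked with simple geometry: convert the 0.8 mm pitch to mils, subtract the land diameter (hole plus an annular ring on each side), and compare the remaining gap with the width a differential pair needs. In the sketch below, the 3 mil annular ring, 3.5 mil trace width, 4 mil pair spacing, and 4 mil trace-to-land clearance are all assumed example values, not numbers from a specific design.

```python
MIL_PER_MM = 1000 / 25.4  # 1 mil = 0.0254 mm


def land_diameter_mil(hole_mil: float, annular_ring_mil: float) -> float:
    """Pad (land) diameter: drilled hole plus an annular ring on each side."""
    return hole_mil + 2 * annular_ring_mil


def gap_between_lands_mil(pitch_mm: float, hole_mil: float, annular_ring_mil: float) -> float:
    """Edge-to-edge space between adjacent microvia lands on the BGA grid."""
    return pitch_mm * MIL_PER_MM - land_diameter_mil(hole_mil, annular_ring_mil)


def pair_width_needed_mil(trace_w_mil: float, pair_space_mil: float, clearance_mil: float) -> float:
    """Two traces, the space between them, and the clearance to the land on each side."""
    return 2 * trace_w_mil + pair_space_mil + 2 * clearance_mil


if __name__ == "__main__":
    gap = gap_between_lands_mil(pitch_mm=0.8, hole_mil=4, annular_ring_mil=3)
    need = pair_width_needed_mil(trace_w_mil=3.5, pair_space_mil=4, clearance_mil=4)
    print(f"gap between lands: {gap:.1f} mil, pair needs: {need:.1f} mil, fits: {need <= gap}")
```

With these assumed values, the gap is about 21.5 mil and the pair needs 19 mil, consistent with the estimate above. Plug in your fabricator's annular ring and your impedance-driven trace geometry to repeat the check.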

This example routing would look like the traces shown below. The interleaved ground pour on the VSS pins sits on the top layer and connects to GND on L3 in the inner rows through stacked blind/buried microvias. We prefer to keep the traces in each pair together because we want to minimize reflections (|Sdd11|) at the input of this differential pair. Poor return loss is one of the biggest factors that will inhibit channel compliance at very high data rates, such as the 224 Gbps targets being implemented in these systems.

For the via portion, blind vias on the differential signals are preferable because they span only the layers they need and leave no unused stub, so they present a smaller impedance deviation looking into the differential channel and produce smaller reflections at high data rates. As long as they are not stacked too high, they will be appropriate for 224 Gbps PAM-4 lanes.
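The link between impedance deviation and return loss can be sketched with the reflection coefficient of a single discontinuity, Γ = (Z − Z0)/(Z + Z0), and return loss RL = −20·log10|Γ|. This is a lossless, frequency-independent simplification (a real via's return loss varies with frequency and with the electrical length of the discontinuity), and the 100 Ω reference and via impedances below are assumed example values.

```python
import math


def reflection_coefficient(z_load: float, z_ref: float) -> float:
    """Reflection coefficient of a single impedance step from z_ref to z_load."""
    return (z_load - z_ref) / (z_load + z_ref)


def return_loss_db(z_load: float, z_ref: float) -> float:
    """Return loss in dB (larger is better); infinite for a perfect match."""
    gamma = abs(reflection_coefficient(z_load, z_ref))
    return math.inf if gamma == 0 else -20 * math.log10(gamma)


if __name__ == "__main__":
    Z_DIFF_REF = 100.0  # assumed differential reference impedance, ohms
    for z_via in (95.0, 90.0, 80.0):  # assumed via impedances, ohms
        print(f"{z_via:.0f} ohm via in a {Z_DIFF_REF:.0f} ohm channel: "
              f"RL = {return_loss_db(z_via, Z_DIFF_REF):.1f} dB")
```

Even in this simplified model, each step further from the reference impedance costs several dB of return loss, which is why keeping the via transition close to the channel impedance matters so much at these rates.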

The Simbeor simulation result below shows routing through differential blind vias on 4 mil laminates (spanning L1-L2 in the stackup above) into the BGA and the destination optical module. This channel would be compliant with 224 Gbps PAM-4 signaling; the bandwidth limit is shown with the dashed red line. Technically, this structure could support up to 280 Gbps PAM-4 signaling (70 GHz Nyquist).

Routing to the Fiber Module

Once you have a BGA breakout that does not kill your signal integrity, you will have no problem bringing channels out to the optical modules. Simply design to the required differential impedance and minimize turns and length tuning. There will be another via transition into the SFP connector for the optical module, but that via transition can mirror the BGA breakout via transition so that the signal can pass into the fiber transceiver. One final mixed-mode S-parameter simulation of the complete link, from the PHY BGA to the optical module, will be needed to verify the channel; this concludes the design for this link.
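A mixed-mode simulation reports differential and common-mode quantities (Sdd, Scd, and so on) derived from single-ended S-parameters. The sketch below shows that conversion for one frequency point of a 4-port network in plain Python. It assumes the port convention 1 = P and 2 = N at end A, 3 = P and 4 = N at end B (tools differ, so check yours). The `through_pair` helper is a hypothetical ideal channel used only to show how intra-pair phase skew converts differential signal into common mode (Scd21).

```python
import cmath
import math

# Assumed port convention (tools differ): index 0 = P at end A, 1 = N at end A,
# 2 = P at end B, 3 = N at end B.
_R = 1 / math.sqrt(2)
# Rows map single-ended waves to mixed-mode waves: [diff A, diff B, common A, common B].
M = [
    [_R, -_R, 0.0, 0.0],
    [0.0, 0.0, _R, -_R],
    [_R, _R, 0.0, 0.0],
    [0.0, 0.0, _R, _R],
]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def to_mixed_mode(s_se):
    """Single-ended 4x4 S-matrix (one frequency) -> mixed-mode [[Sdd, Sdc], [Scd, Scc]].

    M is orthonormal, so its inverse is its transpose: S_mm = M S M^T.
    """
    return matmul(matmul(M, s_se), transpose(M))


def through_pair(skew_rad: float = 0.0):
    """Hypothetical ideal, matched pair: P passes straight through; N gets extra phase delay."""
    s = [[0j] * 4 for _ in range(4)]
    s[2][0] = s[0][2] = 1.0 + 0j
    s[3][1] = s[1][3] = cmath.exp(-1j * skew_rad)
    return s


if __name__ == "__main__":
    for skew_deg in (0, 10, 30):
        smm = to_mixed_mode(through_pair(math.radians(skew_deg)))
        sdd21, scd21 = smm[1][0], smm[3][0]  # diff-in at A -> diff / common out at B
        print(f"skew {skew_deg:>2} deg: |Sdd21| = {abs(sdd21):.3f}, |Scd21| = {abs(scd21):.3f}")
```

In practice, your field solver or S-parameter tool performs this conversion for every frequency point; the sketch only shows what the Sdd and Scd terms represent.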

Options for Power Integrity

Handling power integrity in these systems is challenging, given the power regulator topologies outlined below. The power distribution strategy depends on whether the MAC layer is embedded in a host processor (CPU/FPGA or ASIC) or you are only designing a network switch. The PHY and MAC may require a regulator that implements adaptive voltage scaling (AVS), which is not typically used with CPU or FPGA hosts.

- CPU/FPGA host with embedded MAC, external PHY:
  - Needs specialized multiphase, multilevel VRMs for each rail on the host
  - PHY has its own regulator with AVS

- Network switch controller host, external PHY:
  - Controller and PHY can share a regulator with AVS

If you look at a typical ballout, there will be separate rail connections for the main logic power, Tx/Rx power, PLL, and low-speed buses. Some datasheets and application notes recommend using a ferrite to isolate the lower-power or more sensitive PLL rails, as I've discussed in another blog on ferrites in a PDN. This type of isolation is a controversial practice that produces mixed results. Be careful with such power guidelines in these systems; instead, focus on capacitive decoupling to supply power for the fast bitstreams produced by these components.
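One common way to size capacitive decoupling is to compare the decoupling network against a target impedance, Z_target = V_rail × allowed ripple / transient current step. The sketch below computes that target and the number of identical capacitors needed to meet it at chosen frequencies. The rail voltage, ripple, current step, and capacitor parameters are hypothetical values, and the model ignores mounting inductance, plane spreading inductance, and the VRM, so treat it as a first-pass estimate rather than a PDN simulation.

```python
import math


def target_impedance_ohm(v_rail: float, ripple_fraction: float, step_current_a: float) -> float:
    """Z_target = V_rail * allowed ripple / transient current step."""
    return v_rail * ripple_fraction / step_current_a


def cap_bank_impedance_ohm(f_hz: float, n: int, c_f: float, esr_ohm: float, esl_h: float) -> float:
    """|Z| of n identical capacitors in parallel (series RLC each); mounting/plane inductance ignored."""
    w = 2 * math.pi * f_hz
    reactance = w * esl_h - 1 / (w * c_f)
    return math.hypot(esr_ohm, reactance) / n


def caps_needed(freqs_hz, z_target, c_f, esr_ohm, esl_h, n_max=1000):
    """Smallest n that keeps the bank at or below z_target at every listed frequency."""
    for n in range(1, n_max + 1):
        if all(cap_bank_impedance_ohm(f, n, c_f, esr_ohm, esl_h) <= z_target for f in freqs_hz):
            return n
    return None


if __name__ == "__main__":
    # Hypothetical rail and capacitor values for illustration only.
    z_t = target_impedance_ohm(v_rail=0.75, ripple_fraction=0.03, step_current_a=15)
    C, ESR, ESL = 1e-6, 0.005, 0.3e-9
    f_srf = 1 / (2 * math.pi * math.sqrt(ESL * C))
    print(f"target: {z_t * 1e3:.2f} mOhm, capacitor SRF: {f_srf / 1e6:.1f} MHz")
    for f in (1e6, f_srf, 1e8):
        print(f"{f / 1e6:8.1f} MHz: {caps_needed([f], z_t, C, ESR, ESL)} capacitors")
```

The sweep shows why a single capacitor value only holds the target near its self-resonant frequency; away from resonance, the required count rises quickly, which is why decoupling networks typically mix values.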
